Publications

2022

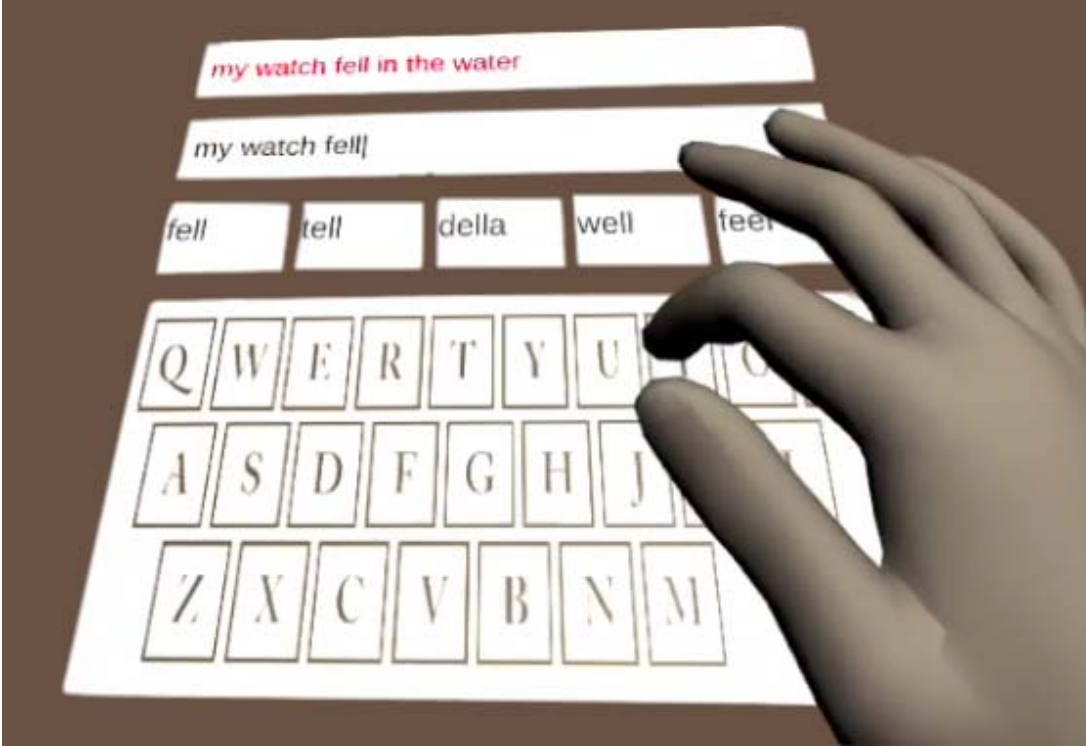

From 2D to 3D: Facilitating Single-Finger Mid-Air Typing on Virtual Keyboards with Probabilistic Touch Modeling

Abstract

Mid-air text entry on virtual keyboards suffers from the lack of tactile feedback, which makes both tap detection and input prediction challenging. In this poster, we demonstrate the feasibility of efficient single-finger mid-air typing through probabilistic touch modeling. We first collected users’ typing data on virtual keyboards of different sizes. Based on an analysis of the data, we derived an input prediction algorithm that incorporates probabilistic touch detection and elastic probabilistic decoding. In an evaluation study in which participants performed real text entry tasks with this technique, they reached a pick-up single-finger typing speed of 24.0 WPM with a 2.8% word-level error rate.
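Probabilistic decoding of this kind is commonly framed with Bayes' rule: each tap is scored against a touch model for every key, and a language prior ranks candidate words. The sketch below is a generic illustration, not the authors' algorithm. It assumes isotropic 2D Gaussian touch models on a hypothetical six-key layout with an illustrative σ, and it does not attempt the probabilistic tap detection or the "elastic" decoding described in the abstract.

```python
import math

# Hypothetical key centres (x, y) in key-width units; y grows downward.
KEY_CENTERS = {
    "q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0),
    "a": (0.25, 1.0), "s": (1.25, 1.0), "d": (2.25, 1.0),
}


def touch_log_likelihood(touch, center, sigma=0.5):
    """log P(touch | key) under an isotropic 2D Gaussian centred on the key.
    `sigma` (in key widths) is an illustrative value, not a fitted one."""
    dx = (touch[0] - center[0]) / sigma
    dy = (touch[1] - center[1]) / sigma
    return -0.5 * (dx * dx + dy * dy) - math.log(2 * math.pi * sigma * sigma)


def decode_word(touches, lexicon):
    """Rank same-length lexicon words by log P(word) + sum_i log P(touch_i | letter_i)."""
    scores = {}
    for word, prior in lexicon.items():
        if len(word) != len(touches):
            continue  # no handling of extra or missed taps in this sketch
        score = math.log(prior)
        for touch, letter in zip(touches, word):
            score += touch_log_likelihood(touch, KEY_CENTERS[letter])
        scores[word] = score
    return sorted(scores, key=scores.get, reverse=True)


touches = [(1.2, 0.6), (2.0, 0.1)]  # first tap lies between 'w' and 's', closer to 's'
print(decode_word(touches, {"we": 0.5, "se": 0.5}))  # ['se', 'we']
print(decode_word(touches, {"we": 0.9, "se": 0.1}))  # ['we', 'se']
```

With equal priors the touch model decides. A strong enough language prior can override an ambiguous tap, which is what makes imprecise mid-air taps usable.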

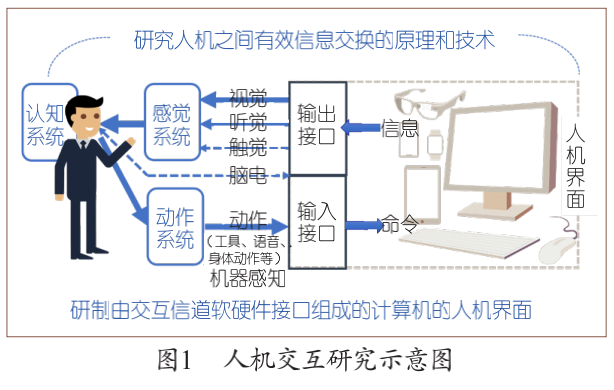

Communications of CCF | Doing Human-Computer Interaction Research Well

Abstract

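Human-Computer Interaction (HCI) studies the principles and technologies for natural and efficient information exchange between people and computer systems. It is realized as user terminal interfaces built from multimodal input and output hardware and software interfaces, which together form specific interaction paradigms. As shown in Figure 1, these interfaces divide into input interfaces, which process user input data, and output interfaces, which feed the machine's processing results back to the user. A person's interaction intention arises in the brain, but today's life sciences and EEG technologies cannot yet read from or write to the brain directly (shown as a dashed line in Figure 1). Interaction intentions must therefore be expressed through actions controlled by the peripheral nervous system, whether by operating tools or through the natural expression of speech and movement. The main task of the input interface is to capture and process people's external behaviors; the presentation of the machine's processing results must match human perceptual and cognitive characteristics.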

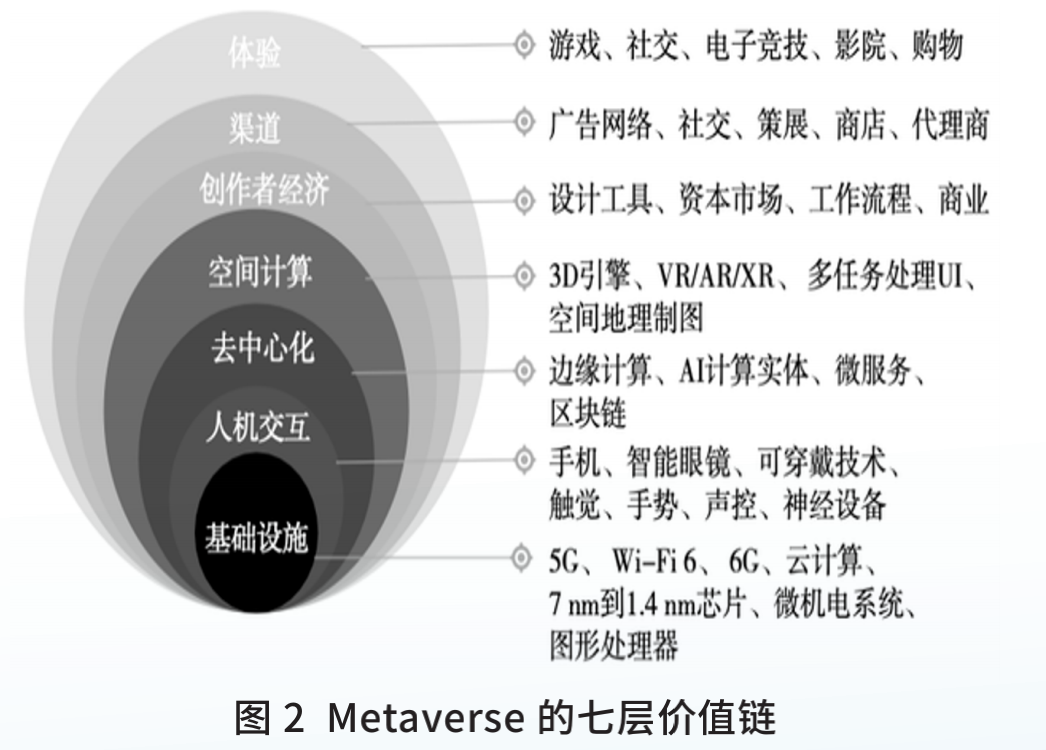

CAAI Communications | The Metaverse Needs Breakthroughs in Human-Computer Interaction

Abstract

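The metaverse aims to make all things informatized and intelligent, creating a fused virtual-physical space in which information fully surrounds people, and evolving into a new form of society unbounded by time and space. Human-computer interaction is a core key technology of the metaverse: extending and virtualizing human-computer interfaces so that humans and machines can exchange semantic information efficiently poses major technical challenges. Gaining a scientific and technological advantage in human-computer interaction is crucial to advancing the related industries. This article analyzes the human-computer interaction challenges of the metaverse, focusing on approaches to breakthroughs in interaction intention inference and on recent progress.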

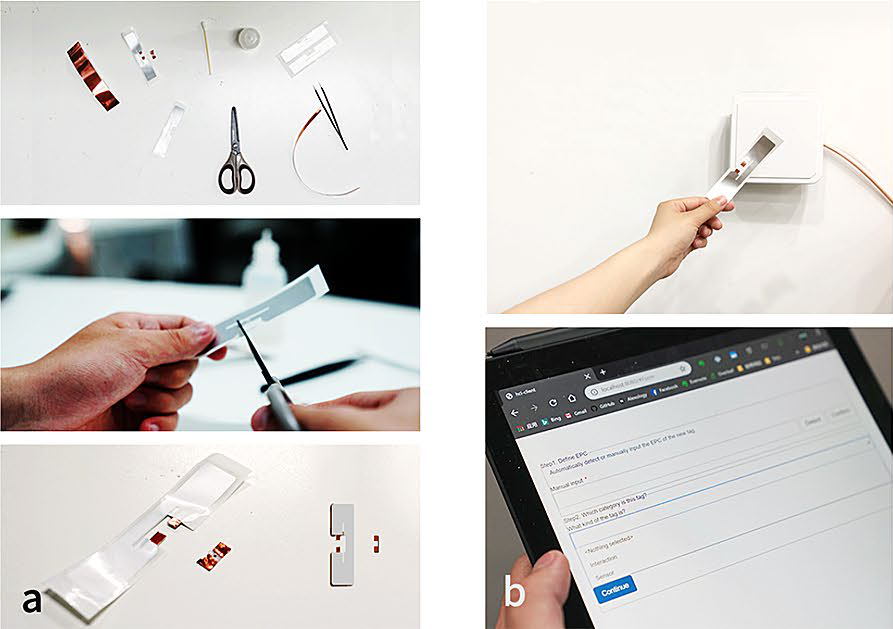

Easily-add battery-free wireless sensors to everyday objects: system implementation and usability study

Abstract

The trend of IoT brings more and more connected smart devices into our daily lives, which can enable ubiquitous sensing and interaction experiences. However, augmenting many everyday objects with sensing abilities is not easy. BitID is an unobtrusive, low-cost, training-free, and easy-to-use technique that enables users to add sensing abilities to everyday objects in a DIY manner. A BitID sensor can be easily made from a UHF RFID tag and deployed on an object so that the tag’s readability (whether the tag is identified by RFID readers) is mapped to binary states of the object (e.g., whether a door is open or closed). To further validate BitID’s sensing performance, we use a robotic arm to press BitID buttons repetitively and swipe on BitID sliders. The average press recognition F1-score is 98.9% and the swipe recognition F1-score is 96.7%. To evaluate BitID’s usability, we implement a prototype system that supports BitID sensor registration, semantic definition, status display, and real-time state and event detection. Using the system, users configured and deployed a BitID sensor in 4.9 min on average. The BitID sensors deployed by 23 of the 24 users worked accurately and robustly. In addition to the previously proposed 'short' BitID sensor, we propose new 'open' BitID sensors, which perform similarly to 'short' sensors.
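The core BitID idea, mapping "was the tag read recently?" to a binary object state and reporting an event whenever that state flips, can be sketched as follows. This is a minimal illustration under stated assumptions, not the BitID system: the sampling step, the 0.25 s readability timeout, and the "closed"/"open" label mapping (standing in for a sensor's semantic definition) are all hypothetical.

```python
def readability_states(read_times, t_end, step=0.1, timeout=0.25):
    """Sample the tag's readability every `step` seconds. The tag counts as
    readable at time t if the reader reported it within the last `timeout` s."""
    reads = sorted(read_times)
    states, i, last_read = [], 0, None
    for k in range(int(round(t_end / step)) + 1):
        t = k * step
        while i < len(reads) and reads[i] <= t:
            last_read = reads[i]
            i += 1
        readable = last_read is not None and t - last_read <= timeout
        states.append((t, readable))
    return states


def detect_events(states, readable_label="closed", unreadable_label="open"):
    """Emit (time, new_state) whenever the binary readability state flips.
    The label mapping is a hypothetical per-sensor semantic definition."""
    events, prev = [], None
    for t, readable in states:
        if prev is not None and readable != prev:
            events.append((t, readable_label if readable else unreadable_label))
        prev = readable
    return events


# Tag read every 0.1 s, silent between 1.0 s and 2.0 s, then read again.
reads = [k * 0.1 for k in range(11)] + [2.0 + k * 0.1 for k in range(11)]
for t, state in detect_events(readability_states(reads, t_end=3.0)):
    print(f"{t:.1f} s -> {state}")
# 1.3 s -> open
# 2.0 s -> closed
```

The timeout trades latency for robustness: a longer window tolerates occasional missed reads but delays detection of the "unreadable" state.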

2021

The practice of applying AI to benefit visually impaired people in China

Abstract

According to the China Disabled Persons' Federation (CDPF), there are now 17 million visually impaired people in China, of whom three million are totally blind while the others have low vision. Over the past two decades, China has experienced tremendous development of information technology. Traditional industries are incorporating information technology and delivering services to users through websites and mobile applications. This is positive technical progress: visually impaired people can access various services without leaving home; for example, they can order food delivery online or book a taxi through an app-based transportation service.

ReflecTrack: Enabling 3D Acoustic Position Tracking Using Commodity Dual-Microphone Smartphones

Abstract

3D position tracking on smartphones has the potential to unlock a variety of novel applications, but has not been made widely available due to limitations in smartphone sensors. In this paper, we propose ReflecTrack, a novel 3D acoustic position tracking method for commodity dual-microphone smartphones. A ubiquitous speaker (e.g., a smartwatch or earbud) generates inaudible Frequency Modulated Continuous Wave (FMCW) acoustic signals that are picked up by both smartphone microphones. To enable 3D tracking with two microphones, we introduce a reflective surface, which is easy to find among everyday objects near the smartphone. The microphones can thus receive both the sound from the speaker and its echoes from the surface for FMCW-based acoustic ranging. To simultaneously estimate the distances along the direct and reflective paths, we propose an echo-aware FMCW technique with a new signal pattern and target detection process. Our user study shows that ReflecTrack achieves a median 3D positioning error of 28.4 mm in a 60 cm × 60 cm × 60 cm space and 22.1 mm in a 30 cm × 30 cm × 30 cm space. We demonstrate the easy accessibility of ReflecTrack using everyday surfaces and objects with several typical applications of 3D position tracking, including 3D input for smartphones, fine-grained gesture recognition, and motion tracking in smartphone-based VR systems.
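The basic FMCW ranging relation behind this approach is as follows. Mixing the received chirp with the transmitted one yields a beat tone whose frequency f_b = B·τ/T is proportional to the path delay τ, so a one-way path length is d = c·f_b·T/B. The sketch below simulates a single path and recovers its length. It is not ReflecTrack's echo-aware signal pattern. It assumes an 18 to 22 kHz chirp lasting 20 ms, 48 kHz sampling, c = 343 m/s, and a transmit time known to the receiver (real systems must also handle speaker/phone clock offsets). With a direct path plus an echo, the beat signal would contain one peak per path.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C


def beat_signal(distance, f0=18000.0, bandwidth=4000.0, duration=0.02, fs=48000.0):
    """Complex beat signal tx(t) * conj(rx(t)) for a single propagation path,
    modelling rx(t) as the transmitted linear chirp delayed by tau = distance / c."""
    slope = bandwidth / duration  # chirp slope B / T in Hz/s
    tau = distance / SPEED_OF_SOUND

    def phase(t):
        return 2 * math.pi * (f0 * t + 0.5 * slope * t * t)

    n = int(duration * fs)
    return [cmath.exp(1j * (phase(i / fs) - phase(i / fs - tau))) for i in range(n)]


def estimate_distance(beat, bandwidth=4000.0, duration=0.02, fs=48000.0,
                      f_max=600.0, f_step=1.0):
    """Locate the beat frequency f_b by scanning |DTFT| on a fine grid, then
    convert it to a path length with d = c * f_b * T / B."""
    best_f, best_mag = 0.0, -1.0
    for m in range(int(f_max / f_step) + 1):
        f = m * f_step
        mag = abs(sum(x * cmath.exp(-2j * math.pi * f * i / fs)
                      for i, x in enumerate(beat)))
        if mag > best_mag:
            best_f, best_mag = f, mag
    return SPEED_OF_SOUND * best_f * duration / bandwidth


print(round(estimate_distance(beat_signal(0.5)), 3))  # 0.501
```

Scanning a fine frequency grid (a zoomed DFT) rather than taking a plain FFT gives sub-bin resolution: here the grid step of 1 Hz bounds the ranging error to about 0.9 mm. The raw bin spacing of 1/T = 50 Hz would correspond to roughly 8.6 cm.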

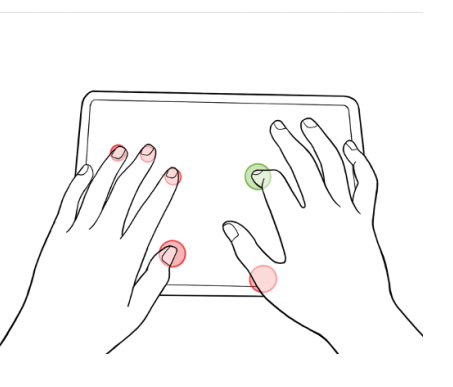

TypeBoard: Identifying Unintentional Touch on Pressure-Sensitive Touchscreen Keyboards

Abstract

Text input is essential in tablet computer interaction. However, tablet software keyboards often misrecognize unintentional touches, which harms efficiency and usability. In this paper, we propose TypeBoard, a pressure-sensitive touchscreen keyboard that prevents unintentional touches. TypeBoard allows users to rest their fingers on the touchscreen, which changes user behavior: on average, users generate 40.83 unintentional touches per 100 keystrokes. TypeBoard prevents unintentional touches with an accuracy of 98.88%. A typing study showed that TypeBoard reduced fatigue (p < 0.005) and typing errors (p < 0.01), and improved the touchscreen keyboard's typing speed by 11.78% (p < 0.005). Because users can touch the screen without triggering responses, we added tactile landmarks to TypeBoard, allowing users to locate keys by touch. This feature further improves typing speed, outperforming the ordinary tablet keyboard by 21.19% (p < 0.001). The results show that pressure-sensitive touchscreen keyboards can prevent unintentional touches and improve usability in several respects, such as avoiding fatigue, reducing errors, and supporting touch typing on tablets.
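The abstract does not say how TypeBoard separates intentional from unintentional touches. As a minimal, hypothetical illustration of why pressure sensing helps, the sketch below accepts a contact as a keystroke only when its peak pressure crosses a threshold, on the assumption that resting fingers press lightly and steadily. The `Touch` class, the threshold of 1.0, and the arbitrary pressure units are assumptions; this is not TypeBoard's classifier.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Touch:
    key: str
    pressure: List[float]  # pressure samples over the contact, arbitrary units


def is_intentional(touch: Touch, peak_threshold: float = 1.0) -> bool:
    """Treat a contact as a keystroke only if its peak pressure reaches the threshold."""
    return max(touch.pressure) >= peak_threshold


def keystrokes(touches: List[Touch], peak_threshold: float = 1.0) -> str:
    """Keep only the keys of contacts classified as intentional."""
    return "".join(t.key for t in touches if is_intentional(t, peak_threshold))


touches = [
    Touch("f", [0.3, 0.4, 0.35]),  # fingers resting on the home row
    Touch("j", [0.3, 0.4, 0.35]),
    Touch("h", [0.2, 1.6, 0.5]),   # sharp presses
    Touch("i", [0.3, 1.3, 0.4]),
]
print(keystrokes(touches))  # hi
```

A single threshold is the simplest possible rule; a practical classifier would likely combine several features of the pressure trace.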

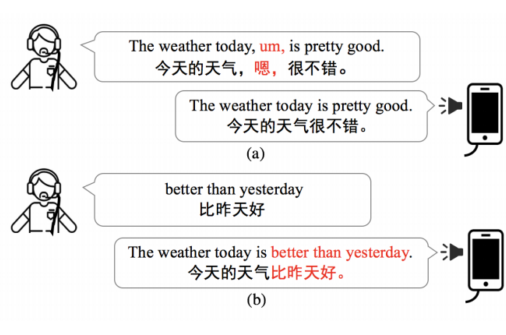

Just Speak It: Minimize Cognitive Load for Eyes-Free Text Editing with a Smart Voice Assistant

Abstract

When entering text by voice, users may encounter colloquial inserts, inappropriate wording, and recognition errors, all of which make voice editing difficult. Users need to locate the errors and then correct them. In eyes-free scenarios, this select-then-modify mode imposes a cognitive burden and a risk of error. This paper introduces neural networks and pre-trained models that understand users' revision intentions from semantics, reducing how much information users must state explicitly. We present two strategies. One removes colloquial inserts automatically. The other allows users to edit by simply speaking the target words, without having to say the context or the incorrect text. Accordingly, our approach predicts whether to insert or replace, which incorrect text to replace, and where to insert. We implement these strategies in SmartEdit, an eyes-free voice input agent controlled with earphone buttons. The evaluation shows that our techniques reduce cognitive load and decrease the average failure rate by 54.1% compared to descriptive commands or re-speaking.
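SmartEdit uses neural networks and pre-trained models to decide what a spoken correction should replace. Purely to illustrate the "just speak the target word" interaction, the sketch below swaps in a much cruder technique: plain string similarity from Python's standard `difflib`. It replaces the most similar word in the sentence and gives up when nothing is similar enough. The function name and the 0.6 threshold are hypothetical, and the sketch handles only single-word replacement, not insertion.

```python
from difflib import SequenceMatcher


def apply_spoken_correction(text, spoken, threshold=0.6):
    """Replace the word most similar to `spoken`; return None if no word is
    similar enough (a real system might then choose to insert instead)."""
    words = text.split()
    if not words:
        return None
    scores = [SequenceMatcher(None, w.lower(), spoken.lower()).ratio() for w in words]
    best = max(range(len(words)), key=scores.__getitem__)
    if scores[best] < threshold:
        return None
    words[best] = spoken
    return " ".join(words)


print(apply_spoken_correction("send the report to Jon tomorrow", "John"))
# send the report to John tomorrow
print(apply_spoken_correction("send the report", "banana"))  # None
```

String similarity only catches corrections that look like the error. Resolving homophones or rewordings requires the semantic models the paper describes.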

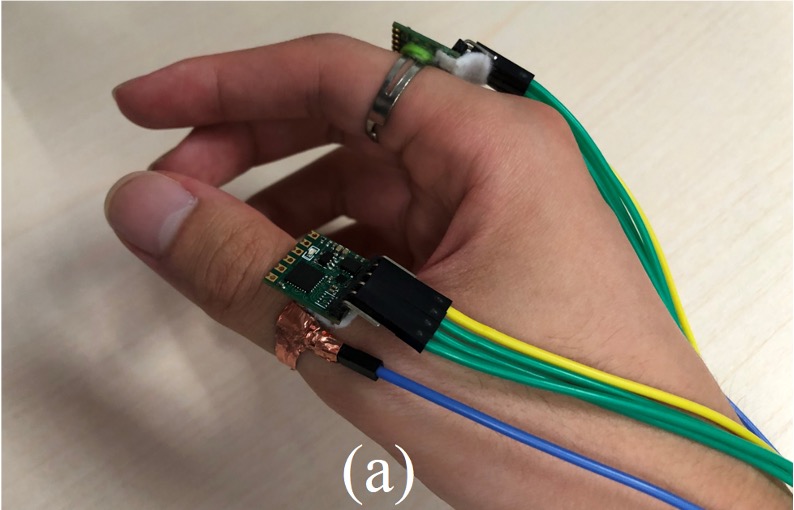

DualRing: Enabling Subtle and Expressive Hand Interaction with Dual IMU Rings

Abstract

We present DualRing, a novel ring-form input device that can capture the state and movement of the user’s hand and fingers.

With two IMU rings attached to the user’s thumb and index finger, DualRing can sense not only the absolute hand gesture relative to the ground but also the relative pose and movement among hand segments. To enable natural thumb-to-finger interaction, we develop a high-frequency AC circuit for on-body contact detection. Based on DualRing's sensing information, we outline the interaction space and divide it into three sub-spaces: within-hand interaction, hand-to-surface interaction, and hand-to-object interaction. By analyzing the accuracy and performance of our system, we demonstrate DualRing's informational advantage in sensing comprehensive hand gestures compared with single-ring solutions. A user study showed that users favored the interaction space enabled by DualRing for its usability, efficiency, and novelty.
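The relative pose between hand segments can be derived from two IMU orientations. A standard approach, assuming each ring reports a unit orientation quaternion in a shared world frame, is to express one ring's orientation in the other's frame: q_rel = q_index* ⊗ q_thumb. The sketch below shows that relation and checks that turning the whole hand leaves the relative pose unchanged. The quaternion convention (w, x, y, z) and all function names are assumptions, not DualRing's implementation.

```python
import math


def q_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z) tuples."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_conj(q):
    """Conjugate; equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def axis_angle(axis, deg):
    """Unit quaternion for a rotation of `deg` degrees about a unit `axis`."""
    h = math.radians(deg) / 2
    s = math.sin(h)
    return (math.cos(h), axis[0] * s, axis[1] * s, axis[2] * s)


def relative_orientation(q_index, q_thumb):
    """Thumb-ring orientation expressed in the index-ring frame."""
    return q_mul(q_conj(q_index), q_thumb)


def rotation_angle_deg(q):
    """Magnitude of the rotation represented by a unit quaternion, in degrees."""
    return math.degrees(2 * math.acos(min(1.0, abs(q[0]))))


Z, X = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)
q_index, q_thumb = axis_angle(Z, 90), axis_angle(Z, 120)
print(round(rotation_angle_deg(relative_orientation(q_index, q_thumb)), 6))  # 30.0

# Rotating the whole hand (both rings) by the same world rotation keeps the relative pose.
g = axis_angle(X, 40)
rel_turned = relative_orientation(q_mul(g, q_index), q_mul(g, q_thumb))
print(round(rotation_angle_deg(rel_turned), 6))  # 30.0
```

This invariance is why a second ring adds information: a single ring sees only the absolute orientation, while the pair exposes finger-to-finger pose regardless of how the whole hand is held.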