Publications

2021

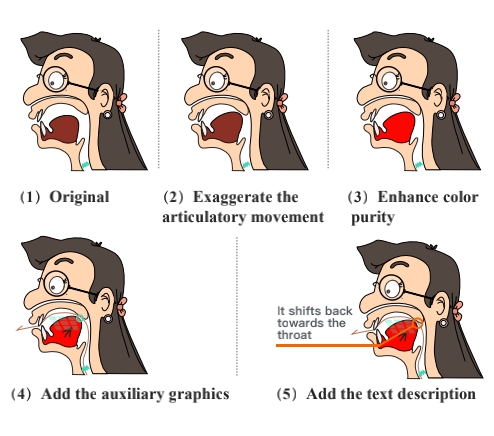

PTeacher: a Computer-Aided Personalized Pronunciation Training System with Exaggerated Audio-Visual Corrective Feedback

Abstract

Second language (L2) English learners often find it difficult to improve their pronunciation because they lack expressive, personalized corrective feedback. We present a Computer-Aided Pronunciation Training system that provides personalized, exaggerated audio-visual corrective feedback on mispronunciations, adapting the degree of audio and visual exaggeration to each individual learner. To identify the appropriate degrees of exaggeration, we propose three critical metrics, assessed with both 100 learners and 22 teachers. User studies demonstrate that our system corrects mispronunciations in a more discriminative, understandable, and perceptible manner.

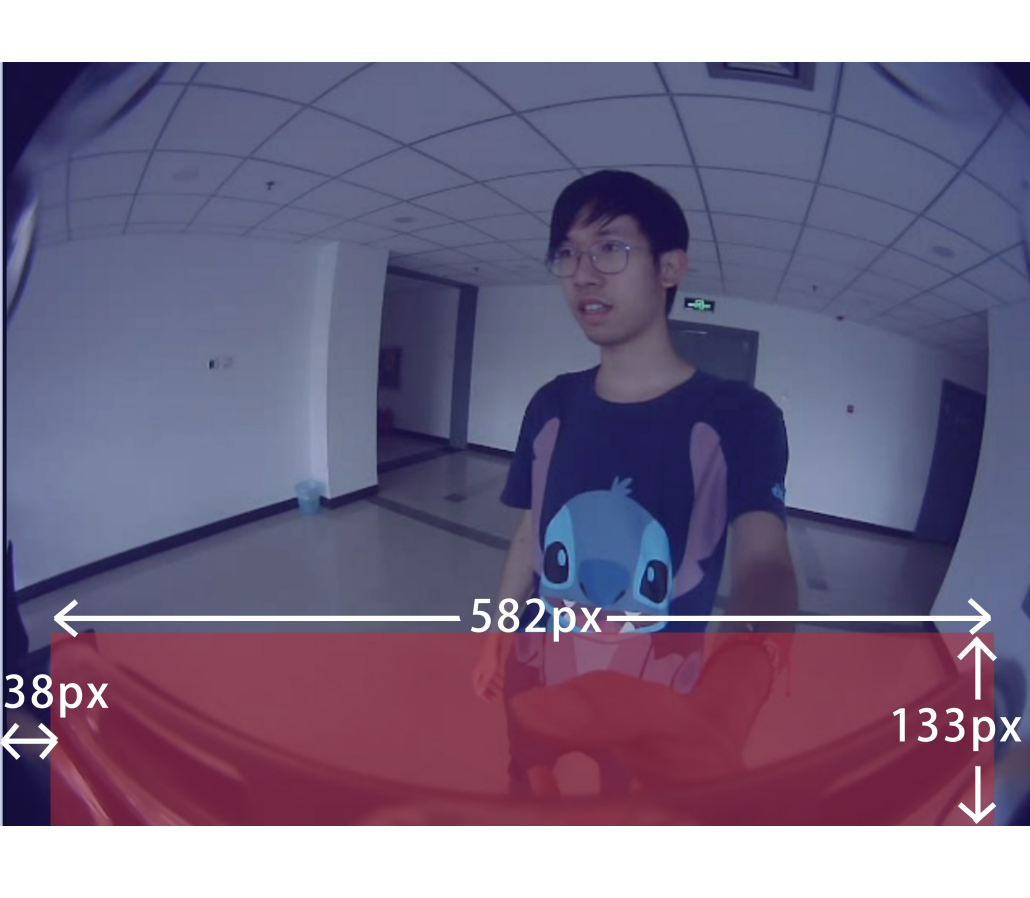

Auth+Track: Enabling Authentication Free Interaction on Smartphone by Continuous User Tracking

Abstract

We propose Auth+Track, a novel authentication model that aims to reduce redundant authentication in everyday smartphone usage. To instantiate the Auth+Track model, we present PanoTrack, a prototype that integrates body and near-field hand information for user tracking. We mount a fisheye camera on top of the phone to obtain a panoramic view that captures both the user's body and their on-screen hands. From the captured video stream, we develop an algorithm that extracts 1) features for user tracking, including body keypoints, their temporal and spatial associations, and near-field hand status; and 2) features for user identity assignment.

HulaMove: Using Commodity IMU for Waist Interaction

Abstract

We present HulaMove, a novel interaction technique that leverages waist movement as a new eyes-free and hands-free input method. We first conducted a study to understand users' ability to control their waist and found that users could easily discriminate eight shifting directions and two rotation orientations. Based on this, we developed a design space with eight gestures. Using a hierarchical machine learning model, our real-time system recognizes these gestures with 97.5% accuracy. Finally, we conducted a second user study to test usability in both real-world scenarios and VR settings. The study indicated that HulaMove reduced interaction time by 41.8% and greatly improved users' sense of presence in the virtual world.

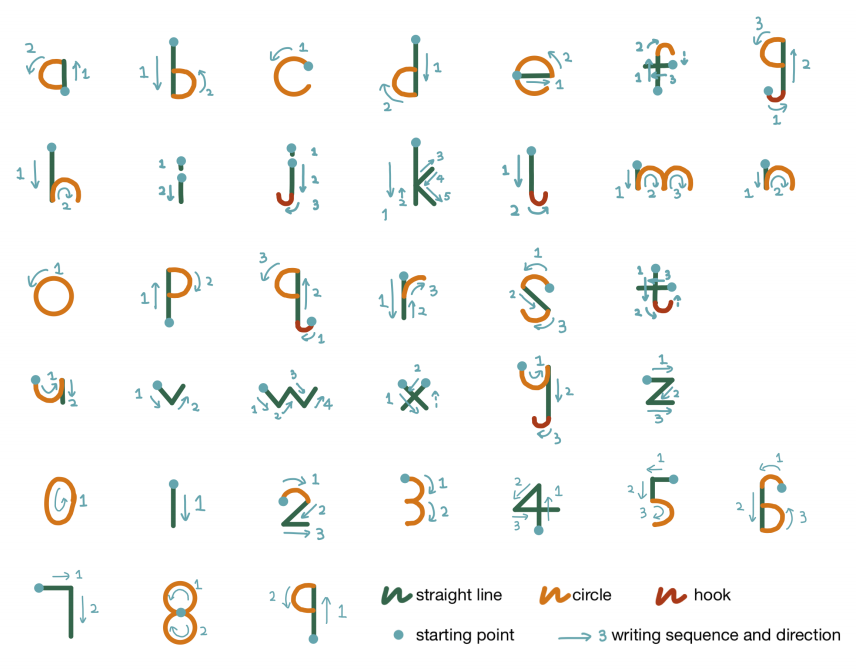

LightWrite: Teach Handwriting to The Visually Impaired with A Smartphone

Abstract

Learning to write is challenging for blind and low vision (BLV) people. We propose LightWrite, a low-cost, easy-to-access smartphone application that uses voice-based descriptive instruction and feedback to teach BLV users to write English lowercase letters and Arabic numerals in a specially designed font. A two-stage study with 15 BLV users with little prior writing knowledge shows that LightWrite can successfully teach users to write characters in an average of 1.09 minutes per letter. After initial training and 20 minutes of daily practice for five days, participants could write an average of 19.9 out of 26 letters legibly enough to be recognized by sighted raters.

2020

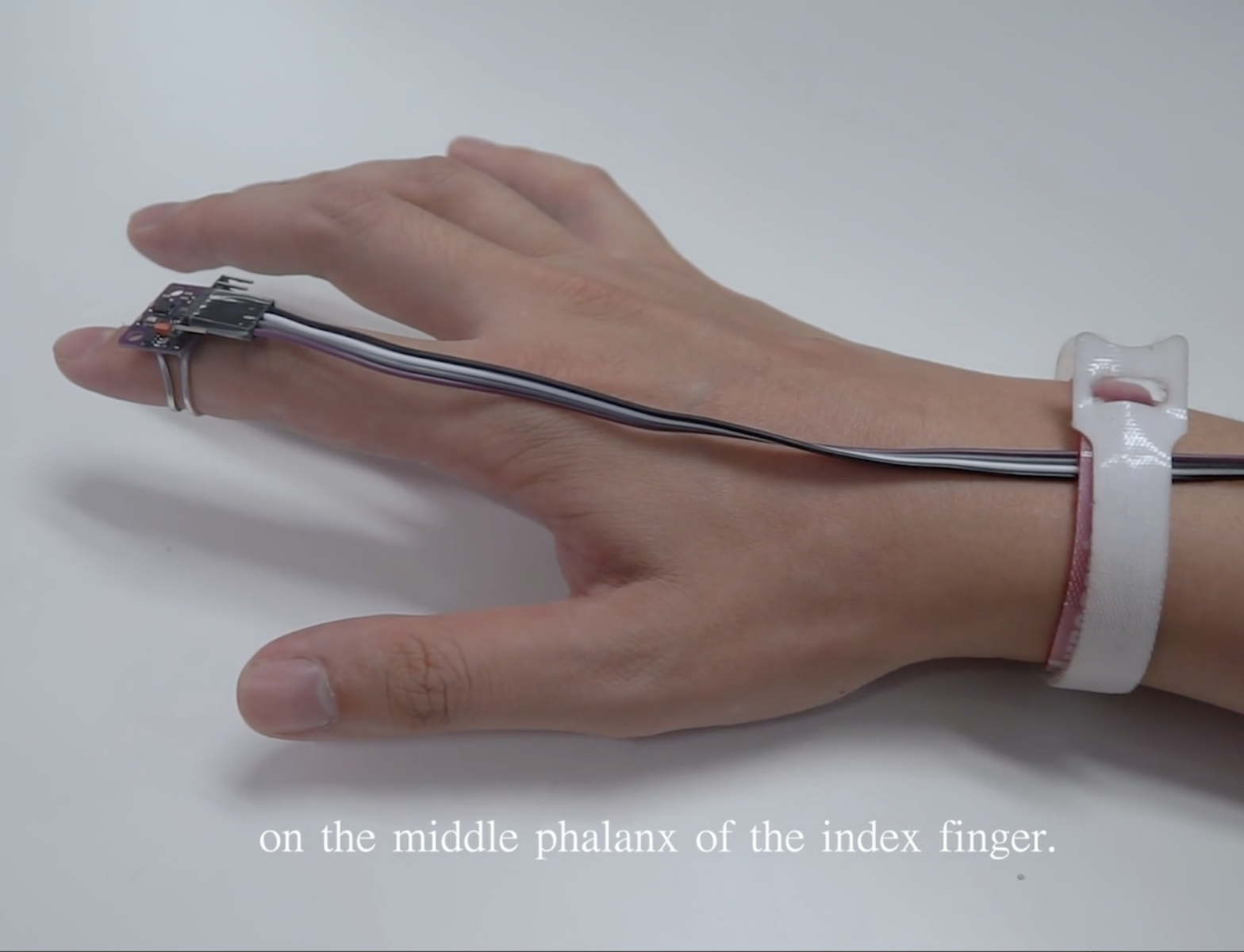

QwertyRing: Text Entry on Physical Surfaces Using a Ring

Abstract

The software keyboard is widely used on digital devices such as smartphones, computers, and tablets. It operates via touch, which is efficient, convenient, and familiar to users. However, some emerging devices, such as AR/VR headsets and smart TVs, do not support touch-based text entry. In this paper, we present QwertyRing, a technique that supports text entry on physical surfaces using an IMU ring. Evaluation shows that users can type at 20.59 words per minute after five days of training.

Virtual Paving: Rendering a Smooth Path for People with Visual Impairment through Vibrotactile and Audio Feedback

Abstract

We propose Virtual Paving, which aims to support independent navigation by rendering a smooth path to visually impaired people through multi-modal feedback. This work focuses on the feedback design of Virtual Paving. First, we derived design guidelines from an investigation of visually impaired people's current mobility practices. Next, we developed a multi-modal solution through co-design and evaluation with visually impaired users. This solution includes (1) vibrotactile feedback on the shoulders and waist to give directional cues and (2) audio feedback to describe road conditions ahead of the user. Using this solution, 16 visually impaired participants successfully completed 127 out of 128 trials on 2.1 m-wide basic paths. Subjective feedback indicated that the solution was easy for users to learn and enabled them to walk smoothly.

EarBuddy: Enabling On-Face Interaction via Wireless Earbuds

Abstract

We propose EarBuddy, a real-time system for on-body interaction that leverages the microphone in commercial wireless earbuds to detect tapping and sliding gestures near the face and ears. We developed a design space that generated 27 valid gestures and selected the eight gestures best suited to both human preference and microphone detectability. We collected a dataset of those eight gestures (N=20) and trained deep learning models for gesture detection and classification. Our optimized classifier achieved an accuracy of 95.3%. Finally, we evaluated EarBuddy's usability. Our results show that EarBuddy can facilitate novel interaction and provide a new eyes-free, socially acceptable input method that is compatible with commercial wireless earbuds and has the potential for scalability and generalizability.

PalmBoard: Leveraging Implicit Touch Pressure in Statistical Decoding for Indirect Text Entry

Abstract

We investigated how to incorporate implicit touch pressure, the finger pressure applied to a touch surface during typing, to improve text entry performance via statistical decoding. We focused on one-handed touch-typing on indirect interfaces as an example scenario, collected typing data on a pressure-sensitive touchpad, and analyzed users' typing behavior. Our investigation revealed distinct pressure patterns for different keys and led to a Markov-Bayesian decoder that incorporates pressure image data into decoding. It improved top-1 accuracy from 53% to 74% over a naive Bayesian decoder. We then implemented PalmBoard, a text entry method that uses the Markov-Bayesian decoder and effectively supports one-handed touch-typing on indirect interfaces. Overall, our investigation showed that incorporating implicit touch pressure is effective in improving text entry decoding.

Understanding Window Management Interactions in AR Headset + Smartphone Interface

Abstract

We envision a future in which an AR headset and a smartphone are used together to provide a larger display and precise touch input simultaneously. The input/output interfaces of the two devices can then fuse, redefining how users manage application windows seamlessly across both devices. In this work, we conducted formative interviews with ten participants to understand how users would prefer to manage multiple windows on the fused interface. The interviews highlighted that users' desire to use the smartphone as a window management interface shaped their window management practices. This paper reports how this desire to use the smartphone as a window manager manifests.