Publications

2021

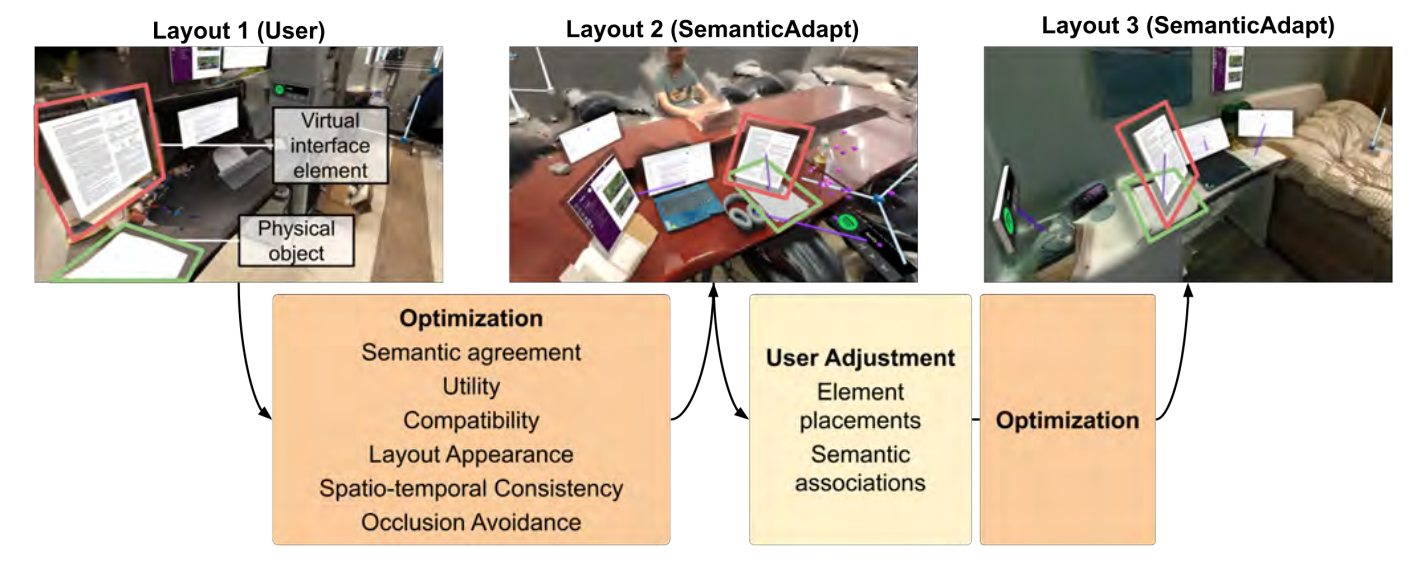

SemanticAdapt: Optimization-based Adaptation of Mixed Reality Layouts Leveraging Virtual-Physical Semantic Connections

Abstract

We present an optimization-based approach that automatically adapts Mixed Reality (MR) interfaces to different physical environments. Current MR layouts, including the position and scale of virtual interface elements, must be adapted manually by users whenever they move between environments or switch tasks. This process is tedious and time-consuming, and arguably needs to be automated for MR systems to benefit end users. We formulate this challenge as a combinatorial optimization problem that automatically decides the placement of virtual interface elements in new environments. To achieve this, we exploit the semantic associations between virtual interface elements and physical objects in an environment. Our optimization also considers the utility of elements for the user's current task, layout factors, and spatio-temporal consistency with previous layouts. All these factors are combined in a single linear program, which is used to adapt the layout of MR interfaces in real time. We demonstrate a set of application scenarios that showcase the versatility and applicability of our approach. Finally, we show that, compared to a naive adaptive baseline that does not take semantic associations into account, our approach reduced the number of manual interface adaptations by 33%.
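To make the idea of "layout adaptation as combinatorial optimization" concrete, the sketch below scores every one-to-one assignment of virtual elements to physical objects and keeps the best. It is a toy illustration, not the SemanticAdapt formulation: the score tables, the weights `w_sem` and `w_cons`, and the names `layout_score` and `adapt_layout` are hypothetical; exhaustive search stands in for the linear program solver described above, and layout factors are omitted.

```python
from itertools import permutations

def layout_score(assignment, semantic, utility, previous, w_sem=1.0, w_cons=0.5):
    """Score one element->object assignment (higher is better).

    semantic[e][o]: hypothetical association in [0, 1] between virtual element e and physical object o
    utility[e]:     hypothetical importance of element e for the current task
    previous:       element -> object placement from the previous layout
    """
    score = 0.0
    for element, obj in assignment.items():
        # Task-relevant elements benefit more from a semantically matching anchor
        score += w_sem * utility[element] * semantic[element][obj]
        if previous.get(element) == obj:
            score += w_cons  # reward spatio-temporal consistency with the old layout
    return score

def adapt_layout(elements, objects, semantic, utility, previous):
    """Exhaustively search one-to-one assignments (each object hosts at most one element)."""
    best, best_score = None, float("-inf")
    for placement in permutations(objects, len(elements)):
        assignment = dict(zip(elements, placement))
        score = layout_score(assignment, semantic, utility, previous)
        if score > best_score:
            best, best_score = assignment, score
    return best

semantic = {
    "recipe": {"stove": 0.9, "fridge": 0.4, "desk": 0.2},
    "timer": {"stove": 0.8, "fridge": 0.3, "desk": 0.1},
}
utility = {"recipe": 1.0, "timer": 0.5}
print(adapt_layout(["recipe", "timer"], ["stove", "fridge", "desk"], semantic, utility, {}))
# → {'recipe': 'stove', 'timer': 'fridge'}
```

Passing `previous={"timer": "desk"}` instead yields `{'recipe': 'stove', 'timer': 'desk'}`: the consistency bonus outweighs the small semantic gain of moving the timer. Exhaustive search grows factorially with the number of elements, which is why a real system would hand an equivalent objective to an LP/ILP solver.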

Understanding the Design Space of Mouth Microgestures

Abstract

As wearable devices move toward the face (e.g., smart earbuds and glasses), there is an increasing need to facilitate intuitive interactions with these devices. Current sensing techniques can already detect many mouth-based gestures; however, users' preferences for these gestures are not fully understood. In this paper, we investigate the design space and usability of mouth-based microgestures. We first conducted brainstorming sessions (N=16) and compiled an extensive set of 86 user-defined gestures. Then, with an online survey (N=50), we assessed the physical and mental demand of our gesture set and identified a subset of 14 gestures that can be performed easily and naturally. Finally, we conducted a remote Wizard-of-Oz usability study (N=11) that mapped gestures to various daily smartphone operations in sitting and walking contexts. From these studies, we develop a taxonomy of mouth gestures, finalize a practical gesture set for common applications, and provide design guidelines for future mouth-based gesture interactions.

LightGuide: Directing Visually Impaired People along a Path Using Light Cues

Abstract

This work presents LightGuide, a directional feedback solution that indicates a safe direction of travel via the position of a light within the user's visual field. We prototyped LightGuide using an LED strip attached to the brim of a cap, and conducted three user studies to explore the effectiveness of LightGuide compared to HapticBag, a state-of-the-art baseline solution that indicates directions through on-shoulder vibrations. Results showed that participants using LightGuide turned in place to target directions more quickly and smoothly, and navigated along basic and complex paths more efficiently, smoothly, and accurately, than when using HapticBag. Users' subjective feedback suggested that LightGuide was easy to learn and intuitive to use.
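One simple way to turn "direction of travel" into "position of a light" is to map the target heading, relative to the user's current heading, linearly onto the LED strip. The sketch below assumes a 31-LED strip spanning 120° of the visual field and clamps targets outside that range to the strip's edges; the LED count, field of view, linear mapping, and function names are all hypothetical, not details of the LightGuide prototype.

```python
def wrap_angle(deg):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0

def led_index(target_heading, user_heading, num_leds=31, fov=120.0):
    """Pick which LED to light on a strip assumed to span `fov` degrees of the visual field.

    Index 0 is the leftmost LED; headings are compass degrees (clockwise positive),
    so a target to the user's right lights an LED right of center.
    """
    relative = wrap_angle(target_heading - user_heading)
    half = fov / 2.0
    relative = max(-half, min(half, relative))  # clamp targets outside the strip to its edges
    return round((relative + half) / fov * (num_leds - 1))

print(led_index(0, 0), led_index(90, 0), led_index(350, 10))  # → 15 30 10
```

A target straight ahead lights the center LED, a target 90° to the right saturates at the right edge, and a target 20° to the left lights an LED left of center.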

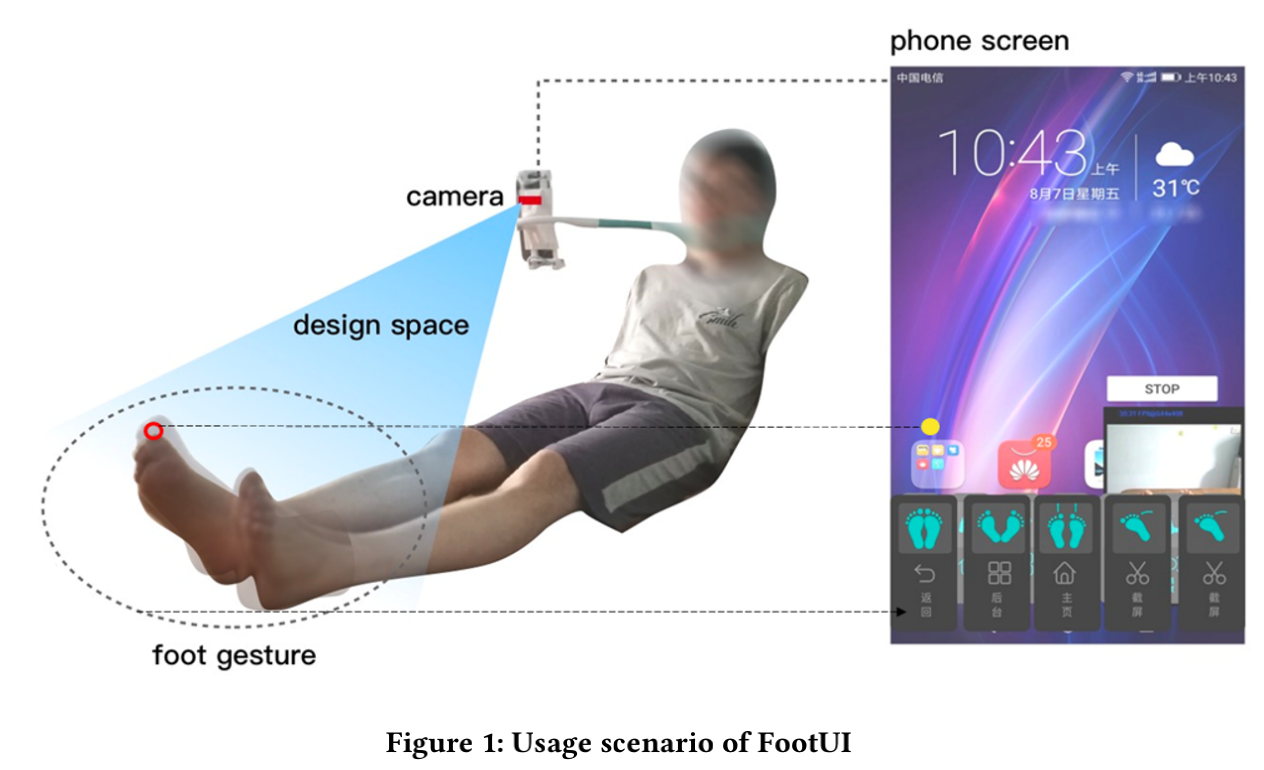

FootUI: Assisting People with Upper Body Motor Impairments to Use Smartphones with Foot Gestures on the Bed

Abstract

Some people with upper body motor impairments but sound lower limbs usually use their feet to interact with smartphones. However, touching the touchscreen with the big toe is tiring, inefficient, and prone to accidental touches. In this paper, we propose FootUI, which leverages the phone camera to track the user's feet and translates foot gestures into smartphone operations. This technique enables users to interact with smartphones while reclining on the bed, improving their comfort. We explore the usage scenario and foot gestures, define the mapping from foot gestures to smartphone operations, and develop a prototype on smartphones that includes the gesture tracking and recognition algorithm and the user interface. Evaluation results show that FootUI is easy, efficient, and interesting to use. Our work provides a novel input technique for people with upper body motor impairments but sound lower limbs.

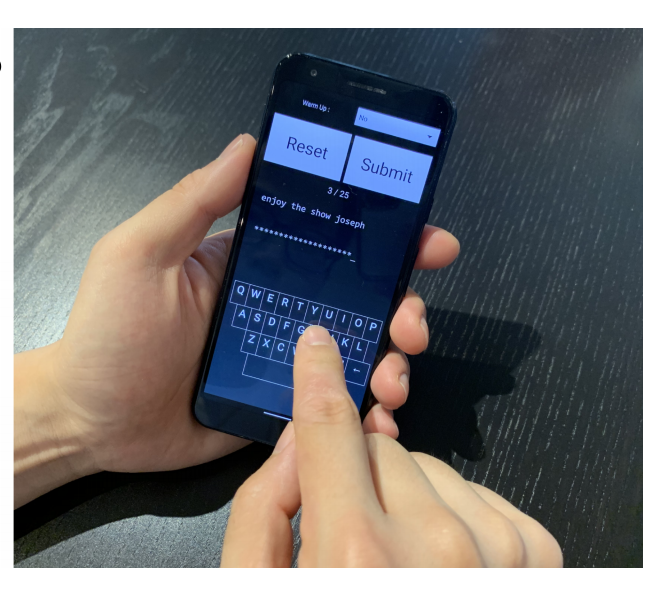

Facilitating Text Entry on Smartphones with QWERTY Keyboard for Users with Parkinson’s Disease

Abstract

QWERTY is the primary keyboard layout for text input on smartphones. However, insertion and substitution errors caused by hand tremors, which users with Parkinson's disease often experience, can severely affect typing efficiency and user experience. In this paper, we investigated how users with Parkinson's disease type on smartphones. In particular, we identified and compared the typing characteristics of users with and without Parkinson's symptoms. We then proposed an elastic probabilistic model for input prediction. By incorporating both spatial and temporal features, this model generalizes the classical statistical decoding algorithm to correct insertion, substitution, and omission errors while maintaining a direct physical interpretation.
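For context, the "classical statistical decoding" baseline that the elastic model generalizes can be sketched as follows: each candidate word is scored by its language-model prior plus a Gaussian spatial likelihood for each touch around the intended key's center. This sketch is not the paper's elastic model; the key geometry (row offsets in key-width units), `sigma`, the tiny lexicon, and the function names are assumptions. Because it requires exactly one touch per letter, it can only fix substitutions, which is precisely the limitation an elastic model that also handles insertions and omissions addresses.

```python
import math

# Approximate QWERTY key centers in key-width units; the row offsets are assumptions.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEY_CENTERS = {
    ch: (col + offset, float(row))
    for row, (keys, offset) in enumerate(zip(ROWS, ROW_OFFSETS))
    for col, ch in enumerate(keys)
}

def log_gaussian(point, center, sigma):
    """Log-density of an isotropic 2D Gaussian centered on a key."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    return -(dx * dx + dy * dy) / (2 * sigma**2) - math.log(2 * math.pi * sigma**2)

def decode(touches, lexicon, sigma=0.5):
    """Return the word maximizing log P(word) + sum of per-touch spatial log-likelihoods.

    Only words with exactly one touch per letter are considered, so this baseline
    cannot correct insertions or omissions.
    """
    total = sum(lexicon.values())
    best_word, best_score = None, -math.inf
    for word, count in lexicon.items():
        if len(word) != len(touches):
            continue
        score = math.log(count / total)  # language-model prior
        score += sum(log_gaussian(t, KEY_CENTERS[c], sigma) for t, c in zip(touches, word))
        if score > best_score:
            best_word, best_score = word, score
    return best_word

lexicon = {"cat": 100, "car": 50, "vat": 5}  # hypothetical word counts
print(decode([(3.7, 2.1), (0.6, 0.9), (4.2, 0.1)], lexicon))  # → cat
```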

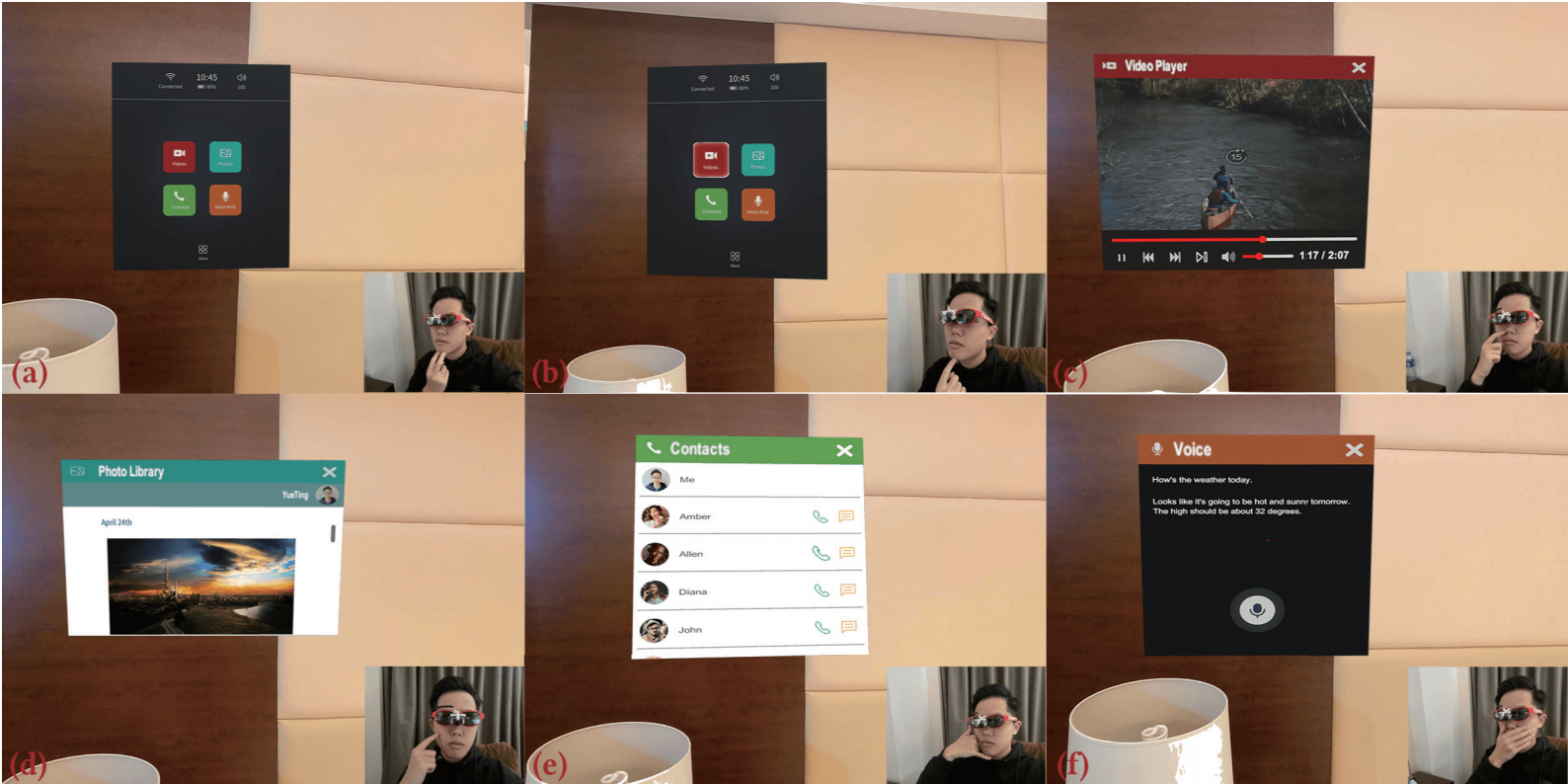

FaceSight: Enabling Hand-to-Face Gesture Interaction on AR Glasses with a Downward-Facing Camera Vision

Abstract

We present FaceSight, a computer vision-based hand-to-face gesture sensing technique for AR glasses. FaceSight mounts an infrared camera on the bridge of AR glasses to provide additional sensing of the lower face and hand behaviors. We obtained a set of 21 hand-to-face gestures and demonstrated their potential interaction benefits through five AR applications. We designed and implemented an algorithm pipeline that classifies all gestures with 83.06% accuracy, as evaluated on data from 10 users. Given its compact form factor and rich gesture set, we consider FaceSight a practical solution for augmenting the input capability of future AR glasses.

Revamp: Enhancing Accessible Information Seeking Experience of Online Shopping for Blind or Low Vision Users

Abstract

Online shopping has become a valuable modern convenience, but blind or low vision (BLV) users still face significant challenges using it. We propose Revamp, a system that leverages customer reviews for interactive information retrieval. Revamp is a browser integration that supports review-based question-answering interactions on a reconstructed product page. From our interview, we identified four main aspects (color, logo, shape, and size) that are vital for BLV users to understand the visual appearance of a product. Based on the findings, we formulated syntactic rules to extract review snippets, which were used to generate image descriptions and responses to users’ queries.
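As a rough illustration of routing review text to the four appearance aspects, the sketch below splits reviews into sentences and files each sentence under any aspect whose keywords it contains. It is deliberately much simpler than the syntactic rules described above: a keyword filter stands in for syntactic parsing, and the keyword lists and the `extract_snippets` name are hypothetical.

```python
import re

# Hypothetical keyword lists for the four aspects identified in the interviews.
ASPECT_KEYWORDS = {
    "color": {"color", "colour", "red", "blue", "black", "white", "green"},
    "logo": {"logo", "brand", "emblem"},
    "shape": {"shape", "round", "square", "rectangular", "oval"},
    "size": {"size", "small", "large", "big", "tiny", "inch", "inches"},
}

def extract_snippets(reviews):
    """Map each aspect to the review sentences that mention one of its keywords."""
    snippets = {aspect: [] for aspect in ASPECT_KEYWORDS}
    for review in reviews:
        # Split after sentence-ending punctuation followed by whitespace
        for sentence in re.split(r"(?<=[.!?])\s+", review.strip()):
            words = set(re.findall(r"[a-z]+", sentence.lower()))
            for aspect, keywords in ASPECT_KEYWORDS.items():
                if words & keywords:
                    snippets[aspect].append(sentence)
    return snippets

reviews = [
    "The mug is a deep blue color. Handle feels sturdy.",
    "It is smaller than I expected, about 3 inches tall.",
]
for aspect, found in extract_snippets(reviews).items():
    print(aspect, found)
```

Matching whole words (rather than substrings) keeps, for example, "around" from being filed under "shape"; a syntactic approach would additionally check that the keyword actually modifies the product.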

ProxiMic: Convenient Voice Activation via Close-to-Mic Speech Detected by a Single Microphone

Abstract

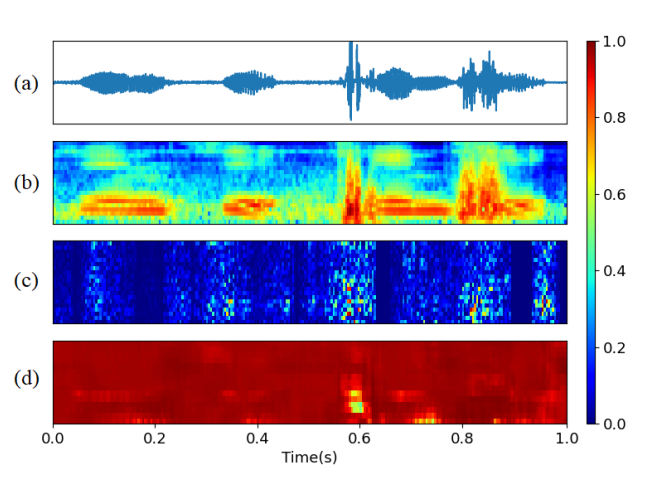

Wake-up-free techniques (e.g., Raise-to-Speak) are important for improving the voice input experience. We present ProxiMic, a closeto-mic (within 5 cm) speech sensing technique using only one microphone. With ProxiMic, a user keeps a microphone-embedded device close to the mouth and speaks directly to the device without wake-up phrases or button presses. To detect close-to-mic speech, we use the feature from pop noise observed when a user speaks and blows air onto the microphone. Sound input is first passed through a low-pass adaptive threshold filter, then analyzed by a CNN which detects subtle close-to-mic features (mainly pop noise). Our two-stage algorithm can achieve 94.1% activation recall, 12.3 False Accepts per Week per User (FAWU) with 68 KB memory size, which can run at 352 fps on the smartphone. The user study shows that ProxiMic is eficient, user-friendly, and practical.
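The first stage of such a two-stage detector can be pictured as a cheap gate that only wakes the classifier when low-frequency energy jumps well above the recent background. The sketch below uses a one-pole IIR low-pass filter and a running background estimate; the filter form, frame length, `ratio`, `adapt`, and function names are assumptions rather than ProxiMic's actual parameters, and the CNN stage is not shown.

```python
def low_pass(samples, alpha=0.1):
    """One-pole IIR low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out

def candidate_frames(samples, frame_len=160, ratio=4.0, adapt=0.05, alpha=0.1):
    """Return indices of frames whose low-passed energy exceeds `ratio` x a running background level.

    Flagged frames would be handed to the second-stage classifier (not shown).
    The background estimate is only updated on frames that are not flagged.
    """
    filtered = low_pass(samples, alpha)
    background, hits = None, []
    for idx, start in enumerate(range(0, len(filtered) - frame_len + 1, frame_len)):
        frame = filtered[start:start + frame_len]
        energy = sum(v * v for v in frame) / frame_len
        if background is not None and energy > ratio * background:
            hits.append(idx)
        elif background is None:
            background = energy  # initialize from the first frame
        else:
            background += adapt * (energy - background)  # slowly track ambient level
    return hits

# Synthetic input: faint high-frequency noise with one low-frequency "pop" burst in frame 5
noise = [0.01 if i % 2 == 0 else -0.01 for i in range(160 * 5)]
signal = noise + [0.5] * 160 + noise[: 160 * 2]
print(candidate_frames(signal))
```

The low-pass filter suppresses the alternating noise but passes the burst, so frame 5 is flagged; frame 6 is flagged as well because the filter's decay tail spills into it. Freezing the background during flagged frames keeps a long burst from raising its own threshold.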

Tactile Compass: Enabling Visually Impaired People to Follow a Path with Continuous Directional Feedback

Abstract

Accurate and effective directional feedback is crucial for electronic travel aids that guide visually impaired people along paths. This paper presents Tactile Compass, a hand-held device that provides continuous directional feedback through a rotatable needle pointing toward the planned direction. We conducted two lab studies to evaluate the effectiveness of this feedback solution. Results showed that, using Tactile Compass, participants could turn in place to the target direction with a mean deviation of 3.03° and could smoothly navigate along paths 60 cm wide with a mean deviation of 12.1 cm from the centerline. Subjective feedback showed that Tactile Compass was easy to learn and use.
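The two quantities in this abstract can be sketched in a few lines: the needle's rotation relative to the device is the planned heading minus the device heading, wrapped to [-180°, 180°), and a path-following deviation can be computed as the mean perpendicular distance of walked positions from the path centerline. Both functions, their names, and the coordinate frame (centimeters on a flat floor) are assumptions for illustration, not the study's measurement code.

```python
import math

def needle_angle(planned_heading, device_heading):
    """Needle rotation (degrees, clockwise positive) relative to the device, wrapped to [-180, 180)."""
    return (planned_heading - device_heading + 180.0) % 360.0 - 180.0

def mean_deviation(points, a, b):
    """Mean perpendicular distance from walked (x, y) points to the path centerline through a and b."""
    (ax, ay), (bx, by) = a, b
    length = math.hypot(bx - ax, by - ay)
    # |cross(b - a, a - p)| / |b - a| is the point-to-line distance
    return sum(abs((bx - ax) * (ay - py) - (ax - px) * (by - ay)) / length for px, py in points) / len(points)

print(needle_angle(10, 350), needle_angle(350, 10))  # → 20.0 -20.0
print(mean_deviation([(0, 10), (100, -14)], (0, 0), (200, 0)))  # → 12.0
```

Wrapping the needle angle keeps the needle on the shorter side of the turn, so a planned heading of 10° seen from a device facing 350° reads as a 20° turn to the right rather than 340° to the left.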