Publications

2019

VIPBoard: Improving Screen-Reader Keyboard for Visually Impaired People with Character-Level Auto Correction

Abstract

Modern touchscreen keyboards are all powered by the word-level auto-correction ability to handle input errors. Unfortunately, visually impaired users are deprived of such benefit because a screen-reader keyboard offers only character-level input and provides no correction ability. In this paper, we present VIPBoard, a smart keyboard for visually impaired people, which aims at improving the underlying keyboard algorithm without altering the current input interaction. Upon each tap, VIPBoard predicts the probability of each key considering both touch location and language model, and reads the most likely key, which saves the calibration time when the touchdown point misses the target key. Meanwhile, the keyboard layout automatically scales according to users’ touch point location, which enables them to select other keys easily. A user study shows that compared with the current keyboard technique, VIPBoard can reduce touch error rate by 63.0% and increase text entry speed by 12.6%.
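The per-tap prediction described in the abstract can be illustrated with a small Bayesian sketch. This is not the authors' implementation: the key coordinates, the isotropic Gaussian touch model, the `sigma` value, and the toy character prior are all assumptions made for illustration.

```python
import math

# Hypothetical key centres (x, y), in key-width units, for part of a Qwerty row.
KEY_CENTERS = {"q": (0.5, 0.5), "w": (1.5, 0.5), "e": (2.5, 0.5), "r": (3.5, 0.5)}


def touch_likelihood(touch, center, sigma=0.6):
    """Unnormalised isotropic Gaussian likelihood of a touch given the intended key.

    The Gaussian form and sigma are assumptions, not values from the paper.
    """
    dx, dy = touch[0] - center[0], touch[1] - center[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))


def key_posterior(touch, char_prior):
    """Combine the touch likelihood with a character-level prior and normalise."""
    scores = {
        key: touch_likelihood(touch, center) * char_prior.get(key, 1e-6)
        for key, center in KEY_CENTERS.items()
    }
    total = sum(scores.values())
    return {key: score / total for key, score in scores.items()}


def most_likely_key(touch, char_prior):
    """Return the key with the highest posterior probability."""
    posterior = key_posterior(touch, char_prior)
    return max(posterior, key=posterior.get)


# A touch that lands on "w" but close to the "w"/"e" boundary.
touch = (1.9, 0.5)
uniform_prior = {key: 1.0 for key in KEY_CENTERS}
# Toy prior standing in for a language model after the context "th" (made-up numbers).
context_prior = {"q": 0.05, "w": 0.05, "e": 0.7, "r": 0.2}

print(most_likely_key(touch, uniform_prior))  # geometry alone picks "w"
print(most_likely_key(touch, context_prior))  # the prior shifts the choice to "e"
```

With a uniform prior the nearest key wins; once the prior favours "e", the same touch is decoded as "e", which is the kind of character-level correction the abstract describes.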

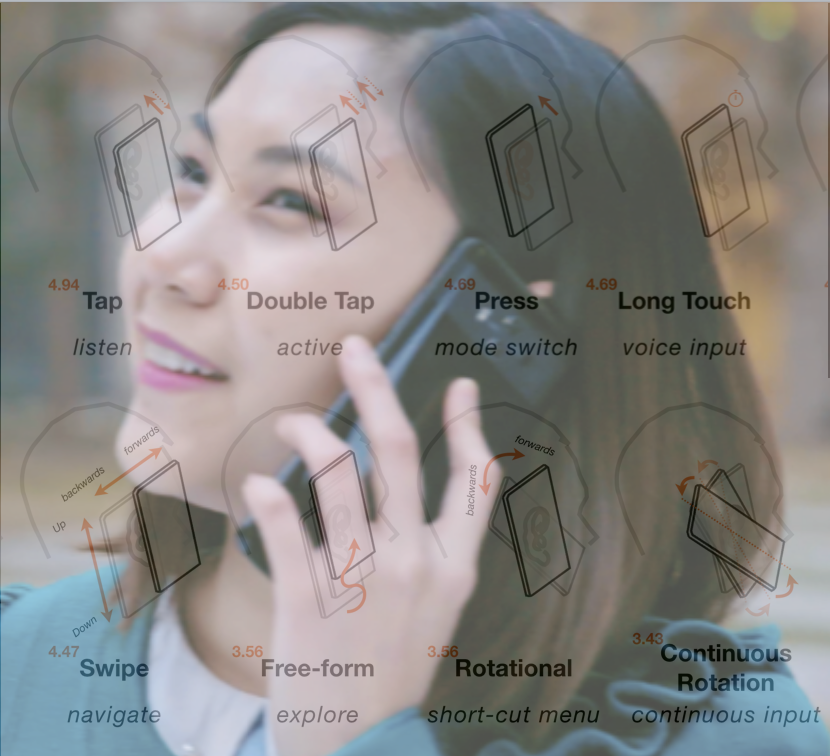

EarTouch: Facilitating Smartphone Use for Visually Impaired People in Mobile and Public Scenarios

Abstract

Interacting with a smartphone using touch input and speech output is challenging for blind and visually impaired people in mobile and public scenarios, where only one hand may be available for input (e.g., while holding a cane) and using the loudspeaker for speech output is constrained by environmental noise, privacy, and social concerns. To address these issues, we propose EarTouch, a one-handed interaction technique that allows users to interact with a smartphone using the ear to tap or draw gestures on the touchscreen and hear the speech output played via the ear speaker privately. In a broader sense, EarTouch brings us an important step closer to accessible smartphones for all users of all abilities.

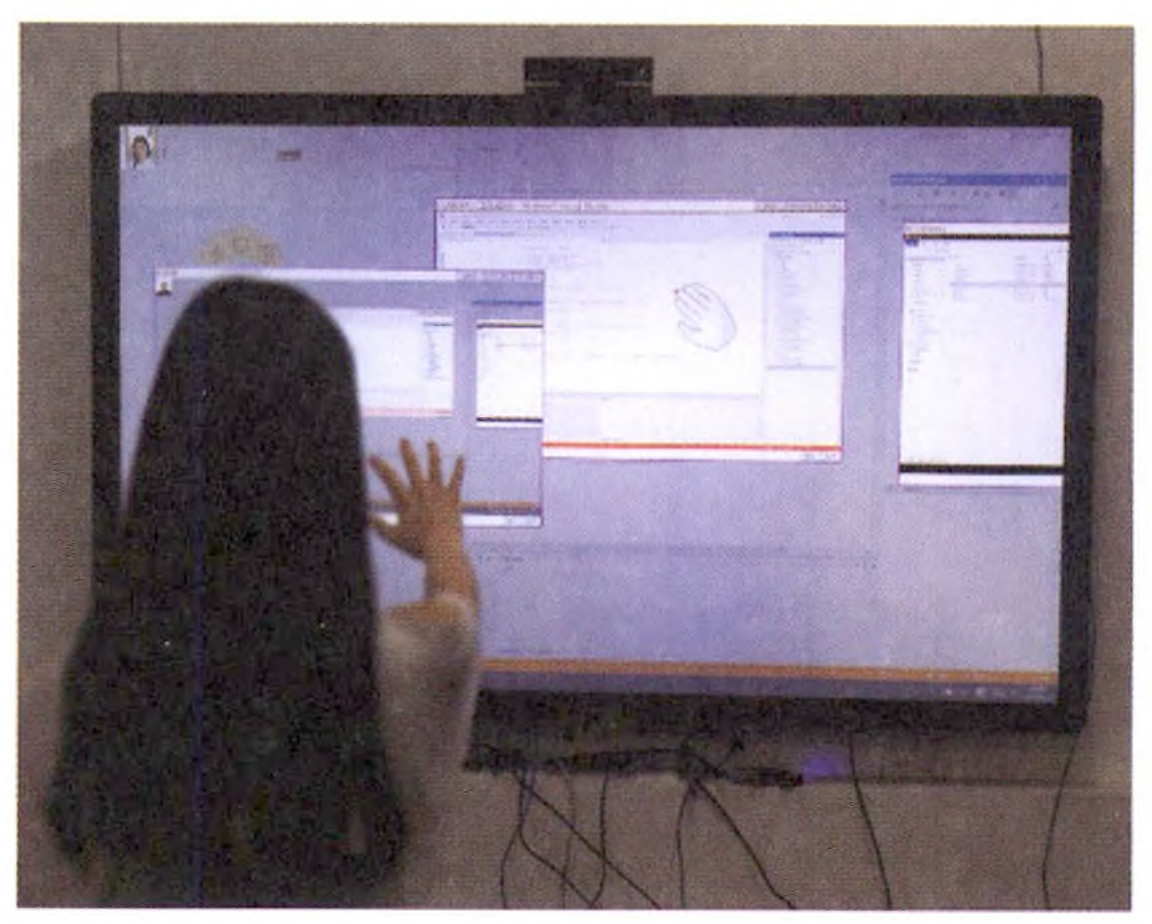

Journal of Software (软件学报) | Research on Cross-Screen Content Sharing Techniques Based on Mid-Air Gestures

Abstract

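In multi-screen environments, existing techniques for cross-screen content sharing have overlooked mid-air gestures. To evaluate mid-air gestures, we implemented and deployed a cross-screen interface sharing system that supports real-time sharing of interface content between screens using mid-air gestures. Based on a study in which users designed gestures, we implemented two mid-air gestures, "grab-drag" and "grab-pull-release". A user study of the two gestures found that completing cross-screen content sharing with mid-air gestures is novel, useful, and easy to learn. Overall, the "grab-drag" gesture offers a better user experience, while the "grab-pull-release" gesture generalizes better to complex scenarios. The former is better suited to near-screen scenarios and is recommended when the screens are adjacent, closely spaced, and operated from a short distance; the latter is better suited to far-screen scenarios and is recommended when other screens lie between the target screens or the screens are far apart. Combining the two gestures is therefore the more reasonable approach.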

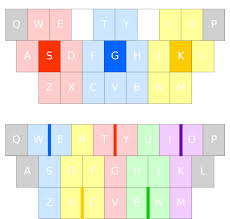

Exploring Low-Occlusion Qwerty Soft Keyboard Using Spatial Landmarks

Abstract

The Qwerty soft keyboard is widely used on mobile devices. However, keyboards often consume a large portion of the touchscreen space, occluding the application view on the smartphone and requiring a separate input interface on the smartwatch. Such space consumption can affect the user experience of accessing information and the overall performance of text input. In order to free up the screen real estate, this article explores the concept of Sparse Keyboard and proposes two new ways of presenting the Qwerty soft keyboard. The idea is to use users’ spatial memory and the reference effect of spatial landmarks on the graphical interface. Our final design K3-SGK displays only three keys while L5-EYOCN displays only five line segments instead of the entire Qwerty layout.

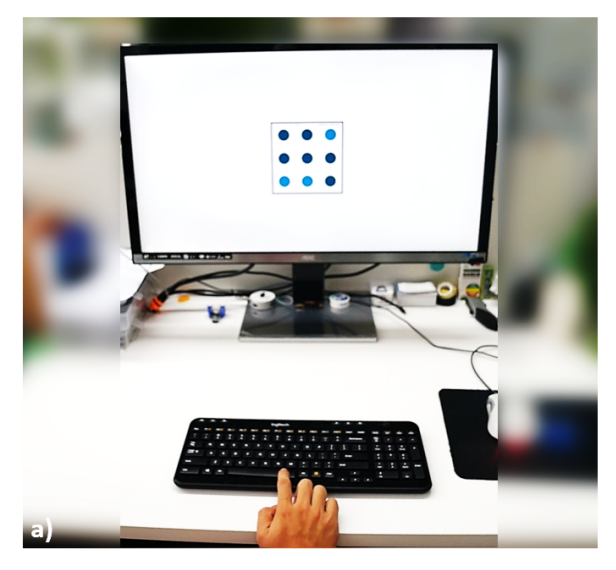

Facilitating Temporal Synchronous Target Selection through User Behavior Modeling

Abstract

Temporal synchronous target selection is an association-free selection technique: users select a target by generating signals (e.g., finger taps and hand claps) in sync with its unique temporal pattern. However, the classical pattern set design and input recognition algorithm of such techniques did not leverage users’ behavioral information, which limits their robustness to imprecise inputs. In this paper, we improve these two key components by modeling users’ interaction behavior. We generated pattern sets for up to 22 targets that minimized the possibility of confusion due to imprecise inputs, and validated that the optimized pattern sets could reduce the error rate from 23% to 7% for the classical Correlation recognizer. We also tested a novel Bayesian recognizer, which achieved higher selection accuracy than the Correlation recognizer when the input sequence is short.
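A correlation-based recognizer of the kind mentioned above can be sketched as follows. This is one plausible reading, not the paper's recognizer: the binary time-slot encoding, the target names, the pattern set, and the use of Pearson correlation are assumptions chosen for illustration.

```python
import math

# Hypothetical pattern set: each target blinks (1) or stays off (0) per time slot.
PATTERNS = {
    "lamp": [1, 0, 0, 1, 0, 1, 0, 0],
    "tv":   [1, 0, 1, 0, 0, 0, 1, 0],
    "fan":  [0, 1, 0, 0, 1, 0, 0, 1],
}


def pearson(a, b):
    """Pearson correlation of two equal-length sequences; 0.0 if either is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def select_target(user_signal, patterns):
    """Pick the target whose pattern correlates best with the user's input signal."""
    scores = {name: pearson(user_signal, pattern) for name, pattern in patterns.items()}
    return max(scores, key=scores.get), scores


# The user follows the "lamp" pattern but misses the third tap (an imprecise input).
user_signal = [1, 0, 0, 1, 0, 0, 0, 0]
best, scores = select_target(user_signal, PATTERNS)
print(best)    # "lamp" still has the highest correlation
print(scores)
```

The example also hints at why pattern set design matters: the more two patterns overlap, the fewer missed or mistimed taps it takes for their correlation scores to become indistinguishable.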

AR Assistive System in Domestic Environment Using HMDs: Comparing Visual and Aural Instructions

Abstract

Household appliances are becoming more varied. In daily life, people usually refer to printed documents while they learn to use different devices. However, augmented reality (AR) assistive systems providing visual and aural instructions have been proposed as an alternative solution. In this work, we evaluated how well users understood instructions delivered in four different ways: (1) baseline paper instructions, (2) visual instructions on head-mounted displays (HMDs), (3) visual instructions on a computer monitor, and (4) aural instructions. In a Wizard of Oz study, we found that, for the task of making espresso coffee, the helpfulness of visual and aural instructions depends on task complexity. Visual instructions are better at showing operation details, while aural instructions are suitable for conveying the intention of an operation. Given the same visual instructions on both displays, HMD users took the longest to complete the task and bore the heaviest perceived cognitive load, due to hardware limitations.

2018

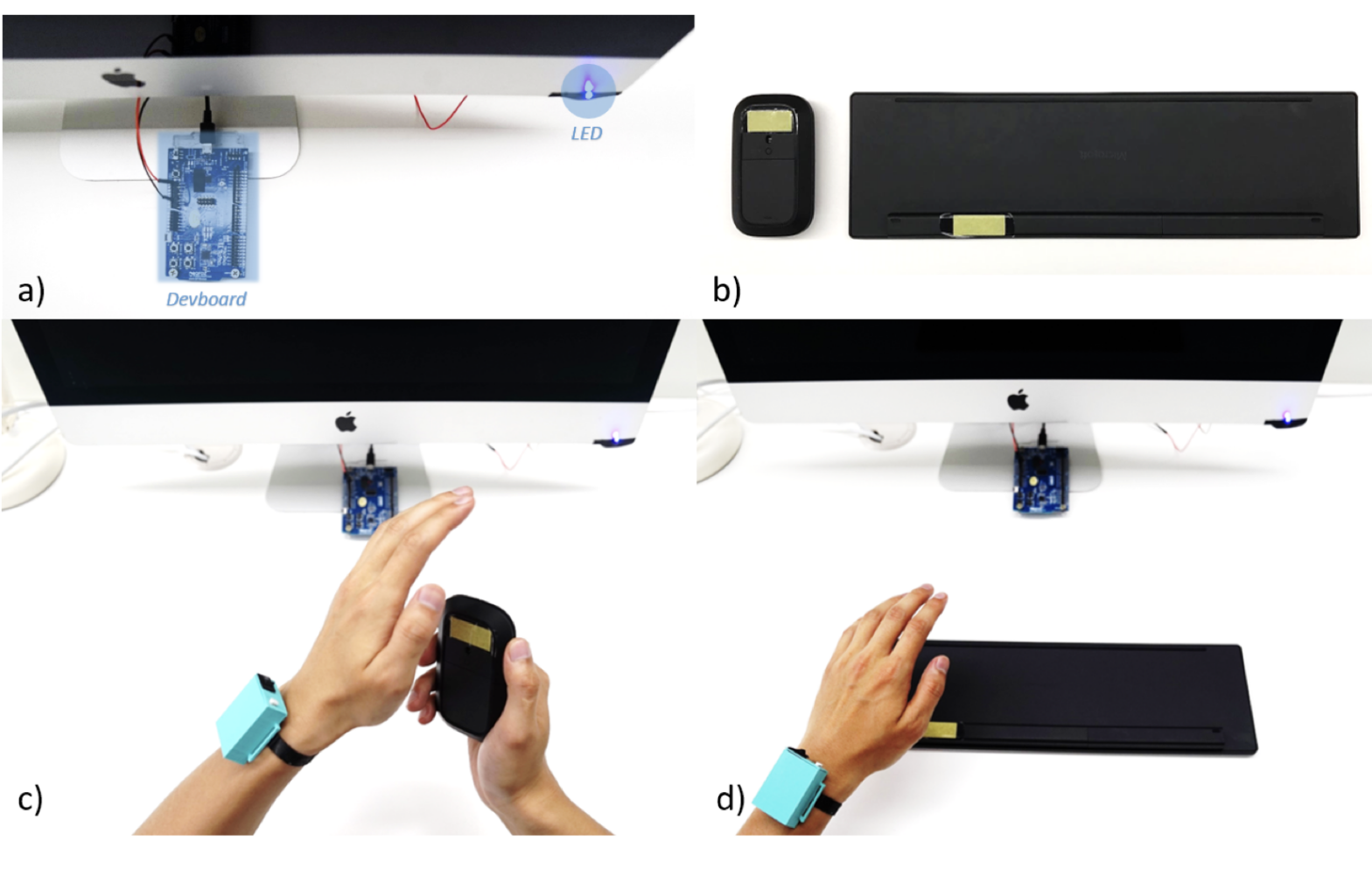

Tap-to-Pair: Associating Wireless Devices with Synchronous Tapping

Abstract

Tap-to-Pair is a spontaneous device association technique that initiates pairing from advertising devices without hardware or firmware modifications. Tapping an area near the advertising device's antenna can change its signal strength. Users can then associate two devices by synchronizing taps on the advertising device with the blinking pattern displayed by the scanning device. By leveraging the wireless transceiver for sensing, Tap-to-Pair does not require additional resources from advertising devices and needs only a binary display (e.g., an LED) on scanning devices.

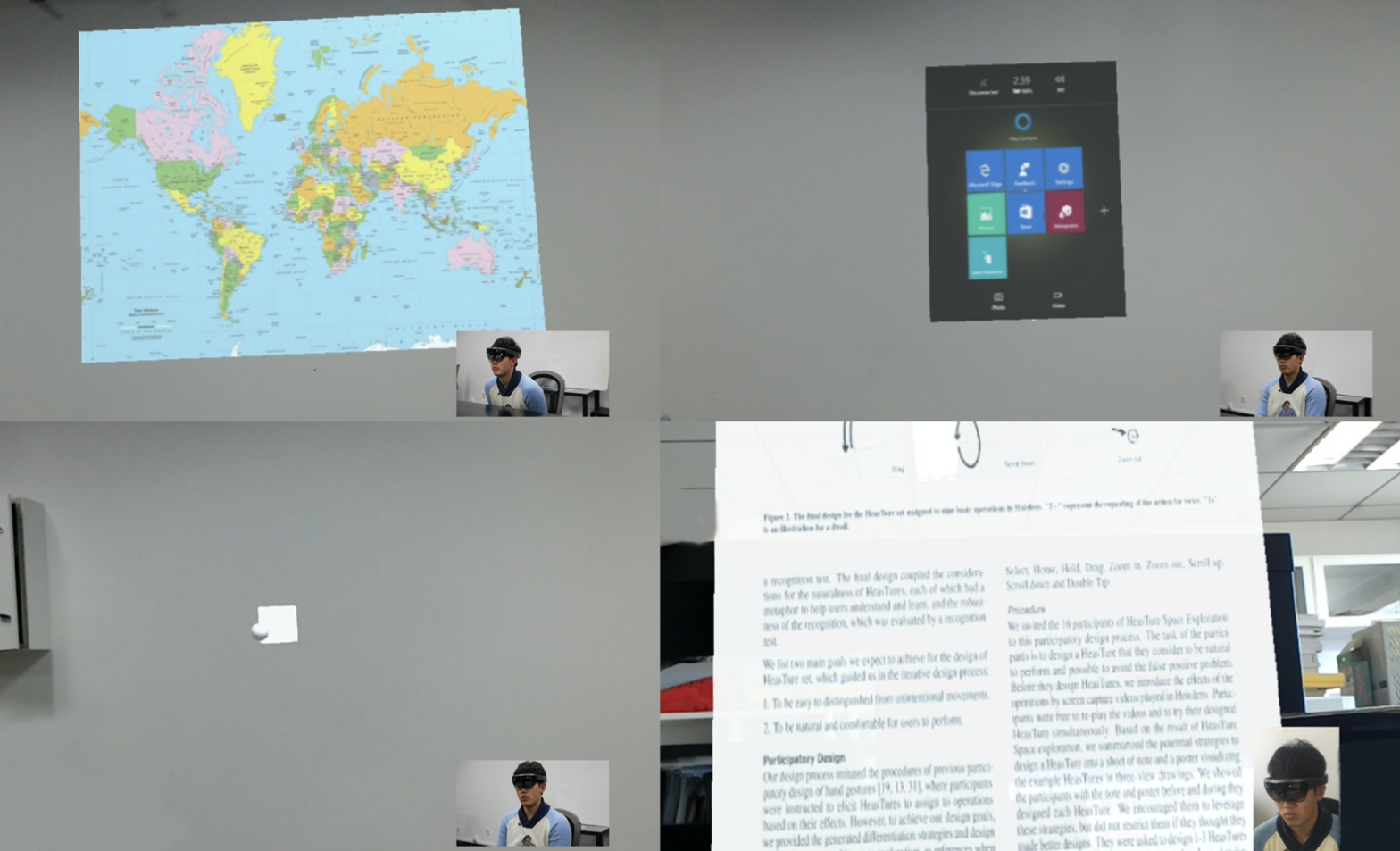

HeadGesture: Hands-Free Input Approach Leveraging Head Movements for HMD Devices

Abstract

We propose HeadGesture, a hands-free input approach to interact with HMD devices. Using HeadGesture, users do not need to raise their arms to perform gestures or operate remote controllers in the air. Instead, they perform simple gestures with head movement to interact. This frees users' hands for other tasks, reduces hand occlusion of the field of view, and alleviates arm fatigue. Evaluation results demonstrate that the performance of HeadGesture is comparable to mid-air hand gestures and that users feel significantly less fatigue.

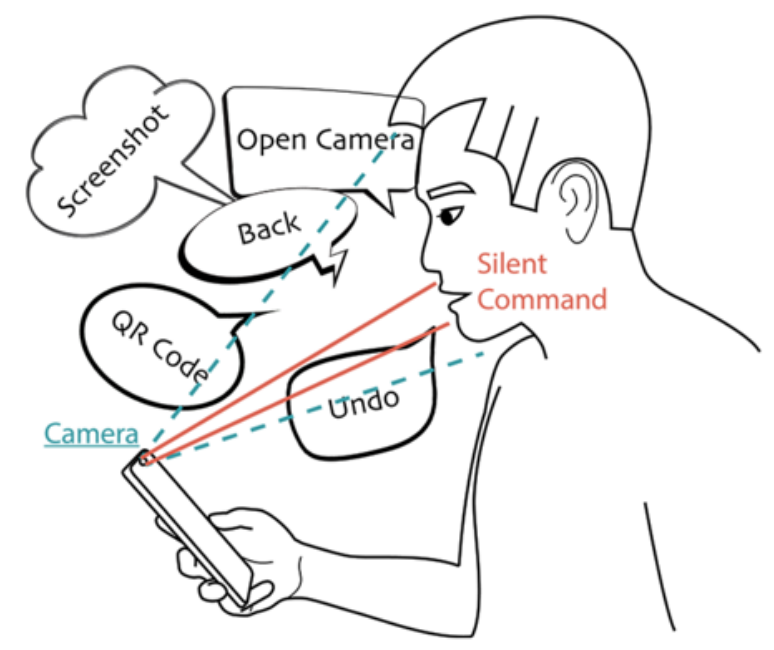

Lip-Interact: Improving Mobile Device Interaction with Silent Speech Commands

Abstract

We present Lip-Interact, an interaction technique that allows users to issue commands on their smartphone through silent speech. Lip-Interact repurposes the front camera to capture the user's mouth movements and recognizes the issued commands with an end-to-end deep learning model. Our system supports 44 commands for accessing both system-level functionalities (launching apps, changing system settings, and handling pop-up windows) and application-level functionalities (integrated operations for two apps). We verify the feasibility of Lip-Interact with three user experiments: evaluating the recognition accuracy, comparing it with touch on input efficiency, and comparing it with voiced commands with regard to personal privacy and social norms. We demonstrate that Lip-Interact can help users access functionality efficiently in one step, enable one-handed input when the other hand is occupied, and assist touch to make interactions more fluent.