Publications

2019

FlexTouch: Enabling Large-Scale Interaction Sensing Beyond Touchscreens Using Flexible and Conductive Materials

Abstract

In this paper, we present FlexTouch, a technique that enables large-scale interaction sensing beyond the spatial constraints of capacitive touchscreens using passive low-cost conductive materials. This is achieved by customizing 2D circuit-like patterns with an array of conductive strips that can be easily attached to the sensing nodes on the edge of the touchscreen. FlexTouch requires no hardware modification, and is compatible with various conductive materials (copper foil tape, silver nanoparticle ink, ITO frames, and carbon paint), as well as fabrication methods (cutting, coating, and ink-jet printing).

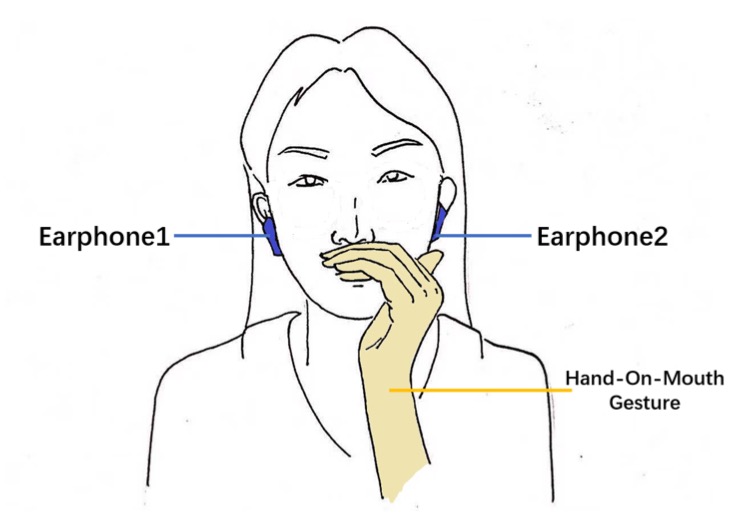

PrivateTalk: Activating Voice Input with Hand-On-Mouth Gesture Detected by Bluetooth Earphones

Abstract

We introduce PrivateTalk, an on-body interaction technique that allows users to activate voice input by performing the Hand-On-Mouth gesture while speaking. The gesture is performed with one hand partially covering the mouth from one side. PrivateTalk provides two benefits simultaneously. First, it enhances privacy by reducing the spread of voice while also concealing lip movements from the view of other people in the environment. Second, the simple gesture removes the need to speak wake-up words and is more accessible than a physical or software button, especially when the device is not in the user’s hands. To recognize the Hand-On-Mouth gesture, we propose a novel sensing technique that leverages the difference between the signals received by two Bluetooth earphones worn on the left and right ears. Our evaluation shows that the gesture can be accurately detected, and that users consistently like PrivateTalk and consider it intuitive and effective.

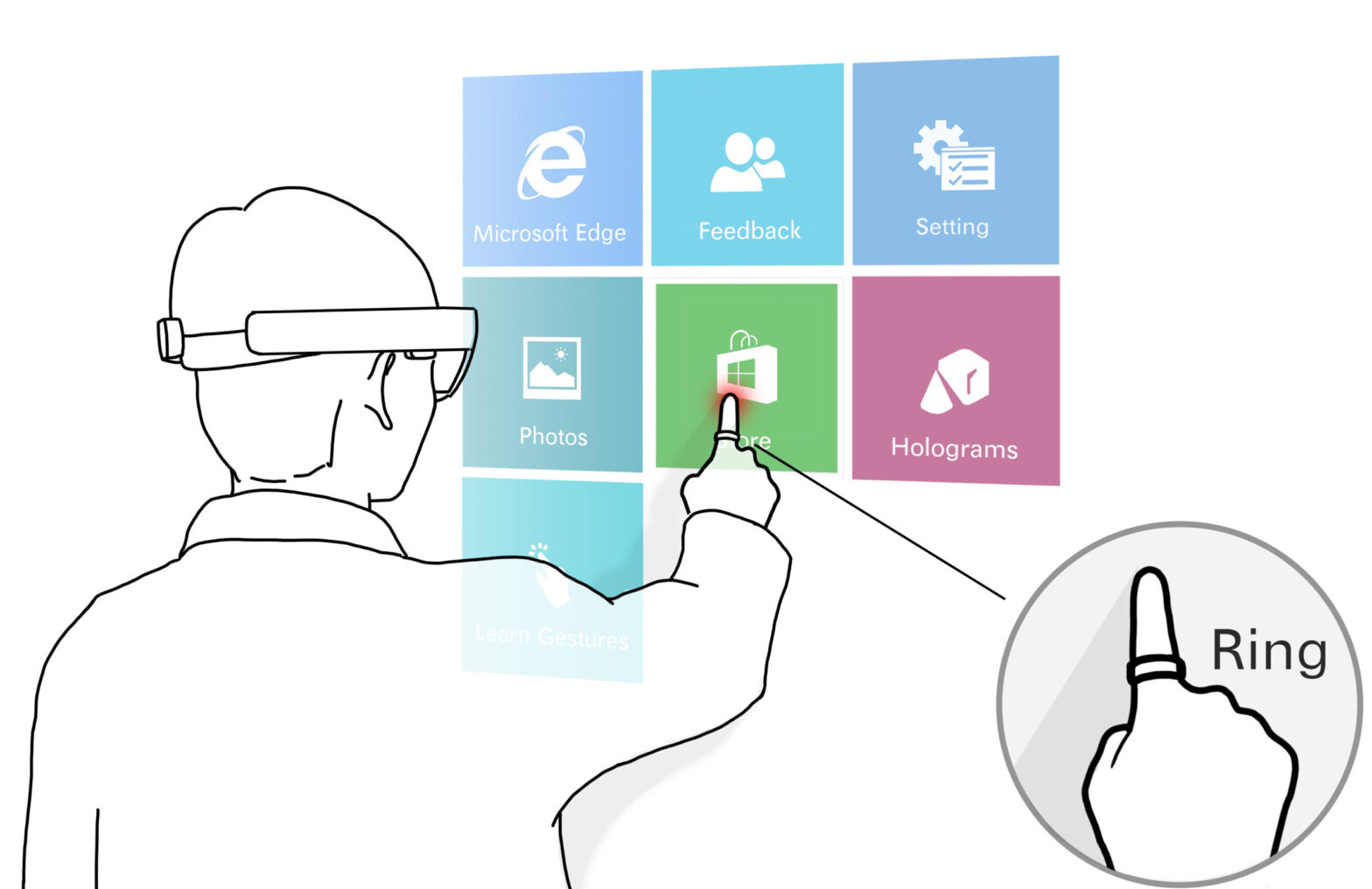

Accurate and Low-Latency Sensing of Touch Contact on Any Surface with Finger-Worn IMU Sensor

Abstract

Head-mounted Mixed Reality (MR) systems enable touch interaction on any physical surface. However, optical methods (i.e., with cameras on the headset) have difficulty determining touch contact accurately. We show that a finger ring with an Inertial Measurement Unit (IMU) can substantially improve the accuracy of contact sensing from 84.74% to 98.61% (F1 score), with a low latency of 10 ms. We tested different ring-wearing positions and tapping postures (e.g., with different fingers and finger parts). Results show that an IMU-based ring worn on the proximal phalanx of the index finger can accurately sense touch contact for most usable tapping postures. Participants preferred wearing a ring for a better user experience. Our approach can be used in combination with optical touch sensing to provide robust and low-latency contact detection.

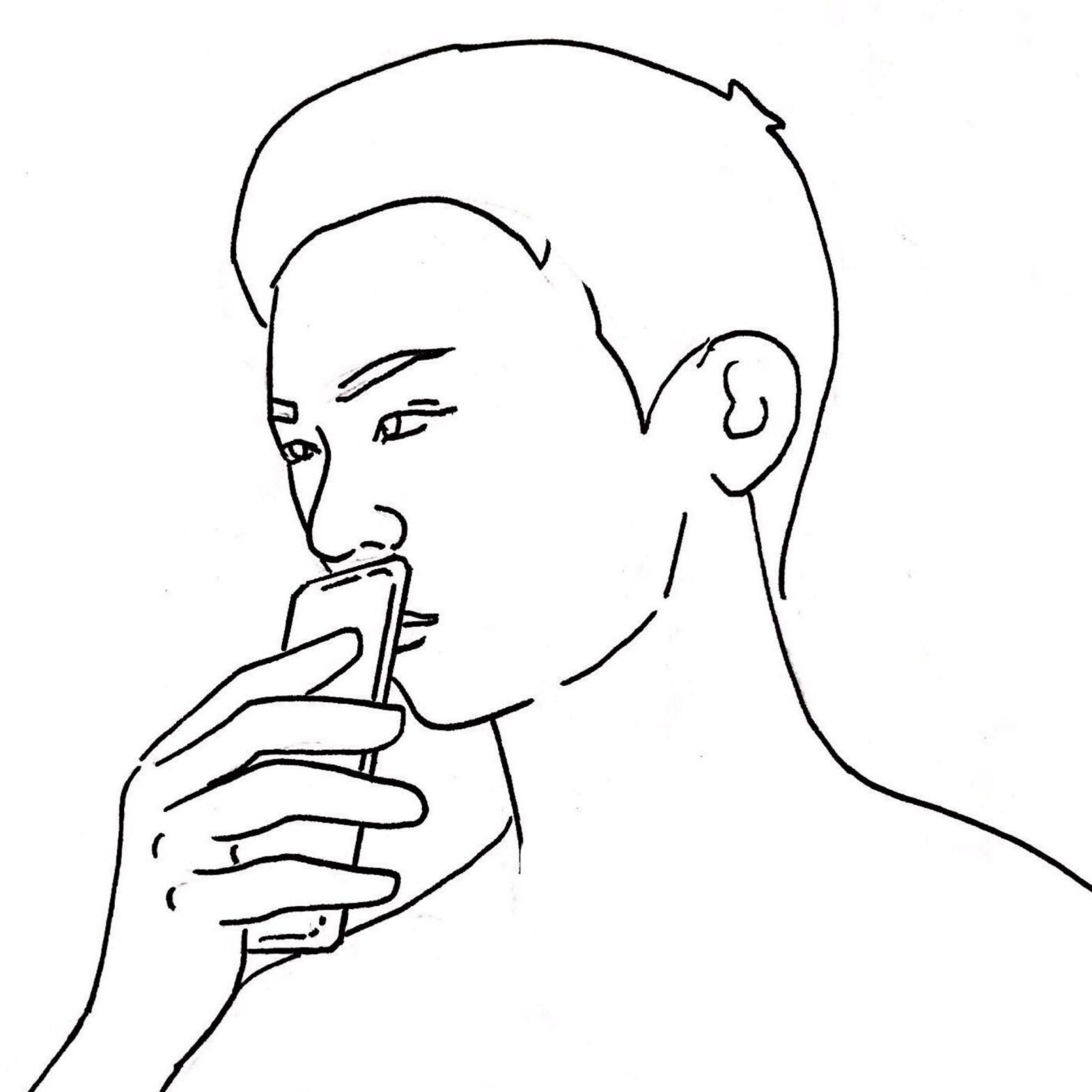

ProxiTalk: Activate Speech Input by Bringing Smartphone to the Mouth

Abstract

We present ProxiTalk, an interaction technique that allows users to enable smartphone speech input by simply moving it close to their mouths. We study how users use ProxiTalk and systematically investigate the recognition abilities of various data sources (e.g., using a front camera to detect facial features, using two microphones to estimate the distance between phone and mouth). Results show that it is feasible to utilize the smartphone’s built-in sensors and instruments to detect ProxiTalk use and classify gestures. An evaluation study shows that users can quickly acquire ProxiTalk and are willing to use it.

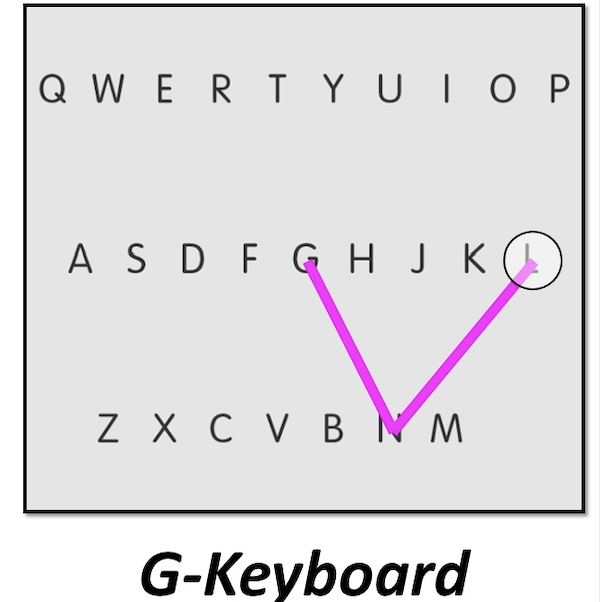

Investigating Gesture Typing for Indirect Touch

Abstract

With the development of ubiquitous computing, entering text on HMDs and smart TVs using handheld touchscreen devices (e.g., smartphones and controllers) is becoming more and more attractive. In these indirect touch scenarios, the touch input surface is decoupled from the visual display. In this paper, we investigate the feasibility of gesture typing for indirect touch, since keeping the finger in contact with the screen during typing makes it possible to provide continuous visual feedback, which is beneficial for input performance. We propose an improved design to address the uncertainty and inaccuracy of the first touch. Evaluation results show that users can quickly acquire indirect gesture typing and type 22.3 words per minute after 30 phrases, which significantly outperforms previous numbers in the literature. Our work provides empirical support for leveraging gesture typing for indirect touch.

"I Bought This for Me to Look More Ordinary": A Study of Blind People Doing Online Shopping

Abstract

Online shopping, by reducing the need to travel, has become an essential part of life for people with visual impairments. However, in HCI, research on online shopping for them has been limited to the analysis of accessibility and usability issues. To develop a broader and better understanding of how visually impaired people shop online, and to design accordingly, we conducted a qualitative study with twenty blind people. Our study highlighted that blind people’s desire to be treated as ordinary had significantly shaped their online shopping practices: they were very attentive to the visual appearance of goods even though they themselves could not see it, and took great pains to find and learn which commodities were visually appropriate for them. This paper reports how their efforts to appear ordinary are manifested in online shopping and suggests design implications to support these practices.

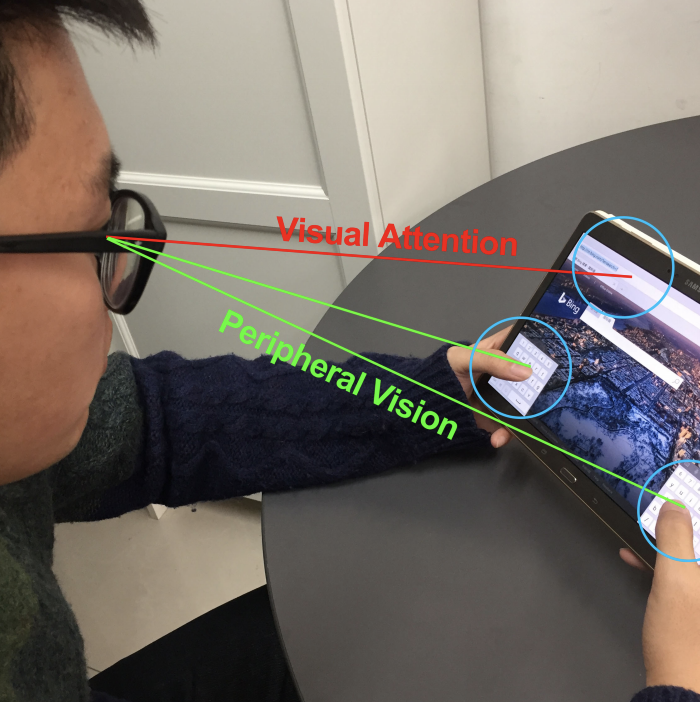

Typing on Split Keyboards with Peripheral Vision

Abstract

Split keyboards are widely used on hand-held touchscreen devices (e.g., tablets). However, typing on a split keyboard often requires eye movement and attention switching between the two halves of the keyboard, which slows users down and increases fatigue. We explore peripheral typing, a superior typing mode in which a user focuses her visual attention on the output text and keeps the split keyboard in peripheral vision. Our investigation showed that peripheral typing reduced attention switching, enhanced user experience, and increased overall performance (27 WPM, 28% faster) over the typical eyes-on typing mode. We also designed GlanceType, a text entry system that supports both peripheral and eyes-on typing modes for real typing scenarios.

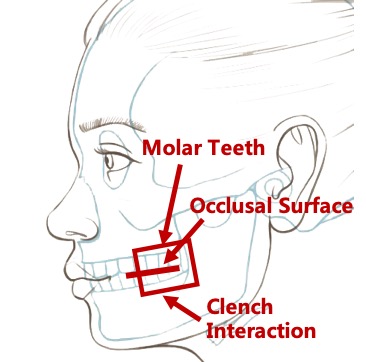

Clench Interaction: Novel Biting Input Techniques

Abstract

We propose clench interaction that leverages clenching as an actively controlled physiological signal that can facilitate interactions. We conducted a user study to investigate users’ ability to control their clench force. We found that users can easily discriminate three force levels, and that they can quickly confirm actions by unclenching (quick release). We developed a design space for clench interaction based on the results and investigated the usability of the clench interface. Participants preferred the clench over baselines and indicated a willingness to use clench-based interactions. This novel technique can provide an additional input method in cases where users’ eyes or hands are busy, augment immersive experiences such as virtual/augmented reality, and assist individuals with disabilities.

HandSee: Enabling Full Hand Interaction on Smartphones with Front Camera-based Stereo Vision

Abstract

HandSee is a novel sensing technique that can capture the state and movement of the user’s hands while using a smartphone. We place a prism mirror on the front camera to achieve stereo vision of the scene above the touchscreen surface. With this sensing ability, HandSee enables a variety of novel interaction techniques and expands the design space for full hand interaction on smartphones.